Exam Details

Exam Code: AZ-204

Exam Name: Developing Solutions for Microsoft Azure

Certification: Microsoft Certifications

Vendor: Microsoft

Total Questions: 532 Q&As

Last Updated: Jun 24, 2025

Microsoft Microsoft Certifications AZ-204 Questions & Answers

-

Question 11:

You need to resolve a notification latency issue.

Which two actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. Set Always On to true.

B. Ensure that the Azure Function is using an App Service plan.

C. Set Always On to false.

D. Ensure that the Azure Function is set to use a consumption plan.

-

Question 12:

You need to ensure that the solution can meet the scaling requirements for Policy Service. Which Azure Application Insights data model should you use?

A. an Application Insights dependency

B. an Application Insights event

C. an Application Insights trace

D. an Application Insights metric

-

Question 13:

You need to ensure the security policies are met.

What code do you add at line CS07 of ConfigureSSE.ps1?

A. -PermissionsToKeys create, encrypt, decrypt

B. -PermissionsToCertificates create, encrypt, decrypt

C. -PermissionsToCertificates wrapkey, unwrapkey, get

D. -PermissionsToKeys wrapkey, unwrapkey, get

-

Question 14:

You need to resolve the capacity issue. What should you do?

A. Convert the trigger on the Azure Function to an Azure Blob storage trigger

B. Ensure that the consumption plan is configured correctly to allow scaling

C. Move the Azure Function to a dedicated App Service Plan

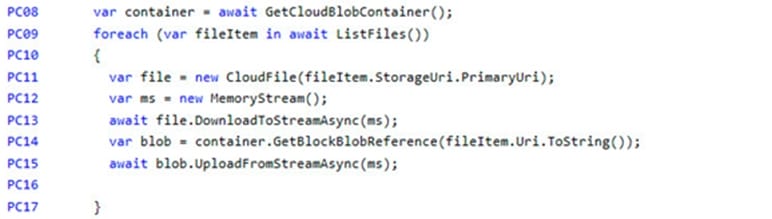

D. Update the loop starting on line PC09 to process items in parallel

-

Question 15:

You need to resolve the log capacity issue. What should you do?

A. Create an Application Insights Telemetry Filter

B. Change the minimum log level in the host.json file for the function

C. Implement Application Insights Sampling

D. Set a LogCategoryFilter during startup

-

Question 16:

You need to implement a solution to resolve the retail store location data issue.

Which three Azure Blob features should you enable? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. Soft delete

B. Change feed

C. Snapshots

D. Versioning

E. Object replication

F. Immutability

-

Question 17:

You need to ensure receipt processing occurs correctly. What should you do?

A. Use blob properties to prevent concurrency problems

B. Use blob SnapshotTime to prevent concurrency problems

C. Use blob metadata to prevent concurrency problems

D. Use blob leases to prevent concurrency problems

-

Question 18:

You need to secure the Azure Functions to meet the security requirements.

Which two actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. Store the RSA-HSM key in Azure Key Vault with soft-delete and purge-protection features enabled.

B. Store the RSA-HSM key in Azure Blob storage with an immutability policy applied to the container.

C. Create a free tier Azure App Configuration instance with a new Azure AD service principal.

D. Create a standard tier Azure App Configuration instance with an assigned Azure AD managed identity.

E. Store the RSA-HSM key in Azure Cosmos DB. Apply the built-in policies for customer-managed keys and allowed locations.

-

Question 19:

You need to audit the retail store sales transactions.

What are two possible ways to achieve the goal? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point.

A. Update the retail store location data upload process to include blob index tags. Create an Azure Function to process the blob index tags and filter by store location.

B. Process the change feed logs of the Azure Blob storage account by using an Azure Function. Specify a time range for the change feed data.

C. Enable blob versioning for the storage account. Use an Azure Function to process a list of the blob versions per day.

D. Process an Azure Storage blob inventory report by using an Azure Function. Create rule filters on the blob inventory report.

E. Subscribe to blob storage events by using an Azure Function and Azure Event Grid. Filter the events by store location.

-

Question 20:

You need to reduce read latency for the retail store solution.

What are two possible ways to achieve the goal? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point.

A. Create a new composite index for the store location data queries in Azure Cosmos DB. Modify the queries to support parameterized SQL and update the Azure Function app to call the new queries.

B. Configure Azure Cosmos DB consistency to strong consistency. Increase the RUs for the container supporting store location data.

C. Provision an Azure Cosmos DB dedicated gateway. Update blob storage to use the new dedicated gateway endpoint.

D. Configure Azure Cosmos DB consistency to session consistency. Cache session tokens in a new Azure Redis cache instance after every write. Update reads to use the session token stored in Azure Redis.

E. Provision an Azure Cosmos DB dedicated gateway. Update the Azure Function app connection string to use the new dedicated gateway endpoint.

Related Exams:

62-193: Technology Literacy for Educators

70-243: Administering and Deploying System Center 2012 Configuration Manager

70-355: Universal Windows Platform – App Data, Services, and Coding Patterns

77-420: Excel 2013

77-427: Excel 2013 Expert Part One

77-725: Word 2016 Core Document Creation, Collaboration and Communication

77-726: Word 2016 Expert Creating Documents for Effective Communication

77-727: Excel 2016 Core Data Analysis, Manipulation, and Presentation

77-728: Excel 2016 Expert: Interpreting Data for Insights

77-731: Outlook 2016 Core Communication, Collaboration and Email Skills

Tips on How to Prepare for the Exams

Certification exams are becoming increasingly important, and more and more enterprises require them of job applicants. But how can you prepare for an exam effectively? How can you prepare in a short time and with less effort? How can you get an ideal result, and where can you find the most reliable resources? Here on Vcedump.com, you will find all the answers. Vcedump.com provides not only Microsoft exam questions, answers, and explanations but also complete assistance with your exam preparation and certification application. If you are unsure about your AZ-204 exam preparation or Microsoft certification application, do not hesitate to visit Vcedump.com to find your solutions.