Exam Details

Exam Code: DATA-ARCHITECT

Exam Name: Salesforce Certified Data Architect

Certification: Salesforce Certifications

Vendor: Salesforce

Total Questions: 257 Q&As

Last Updated: Jul 05, 2025

Salesforce Salesforce Certifications DATA-ARCHITECT Questions & Answers

-

Question 211:

UC has multiple SF orgs that are distributed across regional branches. Each branch stores local customer data inside its org's Account and Contact objects. This creates a scenario where UC is unable to view customers across all orgs.

UC has an initiative to create a 360-degree view of the customer, as UC would like to see Account and Contact data from all orgs in one place.

What should a data architect suggest to achieve this 360-degree view of the customer?

A. Consolidate the data from each org into a centralized datastore

B. Use Salesforce Connect's cross-org adapter.

C. Build a bidirectional integration between all orgs.

D. Use an ETL tool to migrate gap Accounts and Contacts into each org.

-

Question 212:

UC uses Classic Encryption for custom fields and relies on weekly data export reports for backups. While validating the exported data, UC discovered that encrypted field values are still being exported in encrypted form. What should a data architect recommend to make sure decrypted values are exported during data export?

A. Set a standard profile for the data migration user and assign the View Encrypted Data permission.

B. Create another field to copy data from the encrypted field and use this field in the export.

C. Leverage an Apex class to decrypt data before exporting it.

D. Set up a custom profile for the data migration user and assign the View Encrypted Data permission.

-

Question 213:

Universal Containers (UC) is migrating from a legacy system to Salesforce CRM. UC is concerned about the quality of data being entered by users and through external integrations.

Which two solutions should a data architect recommend to mitigate data quality issues?

A. Leverage picklist and lookup fields where possible

B. Leverage Apex to validate the format of data being entered via a mobile device.

C. Leverage validation rules and workflows.

D. Leverage third-party AppExchange tools.

-

Question 214:

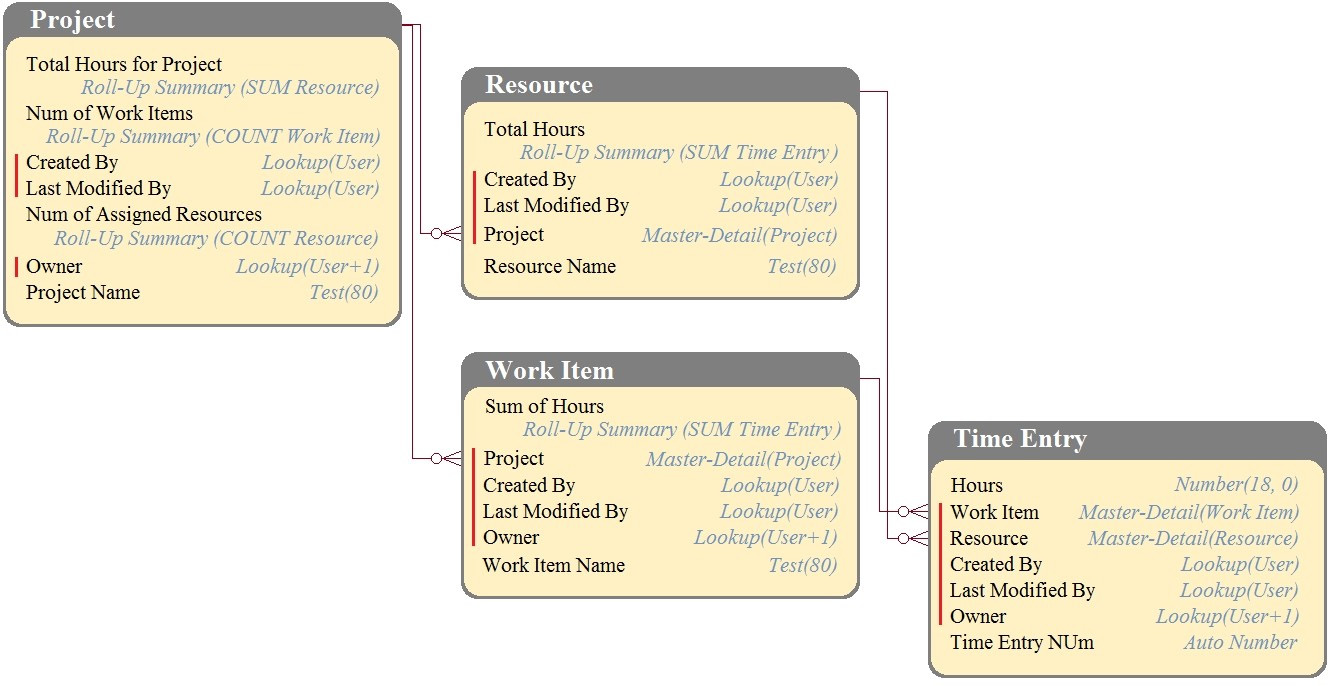

DreamHouse Realty has a data model as shown in the image. The Project object has a private sharing model, and it has roll-up summary fields to calculate the number of resources assigned to the project, total hours for the project, and the number of work items associated with the project.

There will be a large amount of time entry records to be loaded regularly from an external system into Salesforce.

What should the Architect consider in this situation?

A. Load all data after deferring sharing calculations.

B. Calculate summary values instead of Roll-Up by using workflow.

C. Calculate summary values instead of Roll-Up by using triggers.

D. Load all data using external IDs to link to parent records.

-

Question 215:

A large automobile company has implemented Salesforce for its sales associates. Leads flow from its website to Salesforce using a batch integration in Salesforce. The batch job converts the leads to Accounts in Salesforce. Customers visiting their retail stores are also created in Salesforce as Accounts.

The company has noticed a large number of duplicate Accounts in Salesforce. On analysis, it was found that certain customers could interact with its website and also visit the store. The sales associates use Global Search to search for customers in Salesforce before they create the customers.

Which option should a data architect choose to implement to avoid duplicates?

A. Leverage duplicate rules in Salesforce to validate duplicates during the account creation process.

B. Develop an Apex class that searches for duplicates and removes them nightly.

C. Implement an MDM solution to validate the customer information before creating Accounts in Salesforce.

D. Build a custom search functionality that allows sales associates to search for customers in real time upon visiting their retail stores.

-

Question 216:

Universal Containers has a roll-up summary field on Account to calculate the number of contacts associated with an account. During the account load, Salesforce is throwing an "UNABLE_TO_LOCK_ROW" error.

Which solution should a data architect recommend to resolve the error?

A. Defer rollup summary field calculation during data migration.

B. Perform a batch job in serial mode and reduce the batch size.

C. Perform a batch job in parallel mode and reduce the batch size.

D. Leverage Data Loader's platform API to load data.
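As background for this scenario: row-lock errors of this kind typically arise when records that share a parent are written concurrently, because each child write also has to lock the parent to update its roll-up. The sketch below is a minimal illustration, not a Salesforce API call. It assumes hypothetical record dictionaries with an `AccountId` key and a hypothetical helper name, and it shows how records can be ordered by parent and cut into small batches so that records sharing a parent tend to travel together.

```python
from itertools import islice


def batches_grouped_by_parent(records, parent_key, batch_size):
    """Yield lists of at most batch_size records, ordered by parent id.

    Hypothetical helper for illustration only: sorting by the parent id
    keeps children of the same parent adjacent, so they are less likely
    to be split across batches that run at the same time.
    """
    ordered = sorted(records, key=lambda r: r[parent_key])
    it = iter(ordered)
    while True:
        batch = list(islice(it, batch_size))
        if not batch:
            return
        yield batch


# Hypothetical sample data; the ids are placeholders, not real record ids.
contacts = [
    {"LastName": "A", "AccountId": "001B"},
    {"LastName": "B", "AccountId": "001A"},
    {"LastName": "C", "AccountId": "001B"},
    {"LastName": "D", "AccountId": "001A"},
]

batches = list(batches_grouped_by_parent(contacts, "AccountId", 2))
for batch in batches:
    print([r["AccountId"] for r in batch])
```

With a batch size of 2, each batch here contains children of a single parent only. In a real load the batch size and ordering would need to be tuned against the actual data distribution.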

-

Question 217:

A customer wants to maintain geographic location information including latitude and longitude in a custom object. What would a data architect recommend to satisfy this requirement?

A. Create formula fields with geolocation function for this requirement.

B. Create custom fields to maintain latitude and longitude information

C. Create a geolocation custom field to maintain this requirement

D. Recommend AppExchange packages to support this requirement.

-

Question 218:

Universal Containers (UC) is transitioning from Classic to Lightning Experience.

What does UC need to do to ensure users have access to its notes and attachments in Lightning Experience?

A. Add the Notes and Attachments related list to the page layout in Lightning Experience.

B. Manually upload Notes in Lightning Experience.

C. Migrate Notes and Attachments to Enhanced Notes and Files using a migration tool.

D. Manually upload Attachments in Lightning Experience.

-

Question 219:

To address different compliance requirements, such as the General Data Protection Regulation (GDPR), personally identifiable information (PII), the Health Insurance Portability and Accountability Act (HIPAA), and others, a SF customer decided to categorize each data element in SF with the following:

Data owner

Security level, such as confidential

Compliance types, such as GDPR, PII, HIPAA

A compliance audit would require SF admins to generate reports to manage compliance.

What should a data architect recommend to address this requirement?

A. Use the Metadata API to extract field attribute information, and use the extract to classify and build reports.

B. Use field metadata attributes for compliance categorization, data owner, and data sensitivity level.

C. Create a custom object and field to capture necessary compliance information and build custom reports.

D. Build reports for field information, then export the information to classify and report for Audits.

-

Question 220:

A customer wishes to migrate 700,000 Account records in a single migration into Salesforce. What is the recommended solution to migrate these records while minimizing migration time?

A. Use Salesforce SOAP API in parallel mode.

B. Use Salesforce Bulk API in serial mode.

C. Use Salesforce Bulk API in parallel mode.

D. Use Salesforce SOAP API in serial mode.
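To give a sense of scale for the volume in this question, the sketch below works out how 700,000 records would split into batches. The batch size of 10,000 is an assumption based on the commonly documented per-batch record ceiling for the Bulk API and should be checked against current Salesforce limits. The function name is hypothetical.

```python
def plan_batches(total_records, batch_size):
    """Return the list of batch sizes needed to cover total_records.

    Hypothetical planning helper: every batch is full except possibly
    the last one, which holds the remainder.
    """
    full, remainder = divmod(total_records, batch_size)
    return [batch_size] * full + ([remainder] if remainder else [])


# Assumed values: 700,000 records from the question, 10,000 per batch.
sizes = plan_batches(700_000, 10_000)
print(len(sizes))  # number of batches
print(sum(sizes))  # total records covered
```

Under these assumptions the load splits into 70 full batches. How quickly they complete then depends on whether the batches are processed one at a time or concurrently.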
