Exam Details

Exam Code: DP-100

Exam Name: Designing and Implementing a Data Science Solution on Azure

Certification: Microsoft Certifications

Vendor: Microsoft

Total Questions: 564 Q&As

Last Updated: Apr 14, 2025

Microsoft Microsoft Certifications DP-100 Questions & Answers

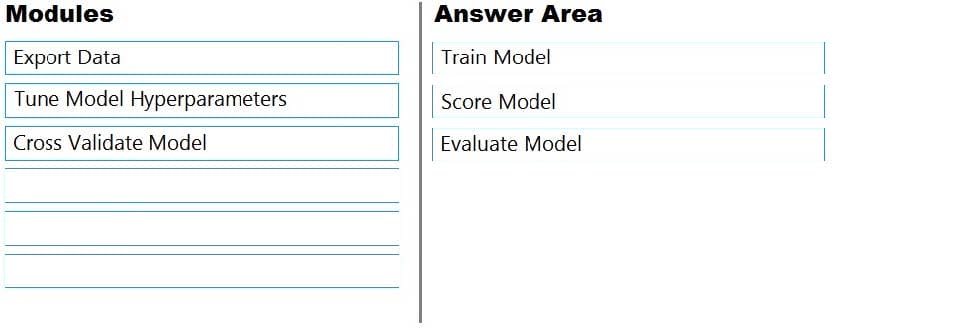

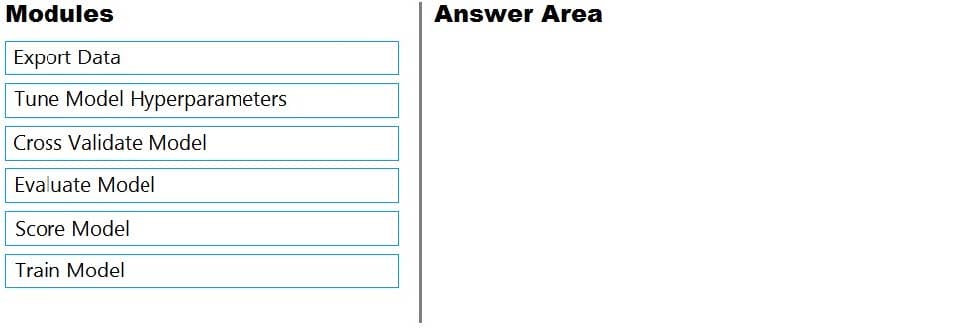

Question 151:

DRAG DROP

You are building an experiment using the Azure Machine Learning designer.

You split a dataset into training and testing sets. You select the Two-Class Boosted Decision Tree as the algorithm.

You need to determine the Area Under the Curve (AUC) of the model.

Which three modules should you use in sequence? To answer, move the appropriate modules from the list of modules to the answer area and arrange them in the correct order.

Select and Place:

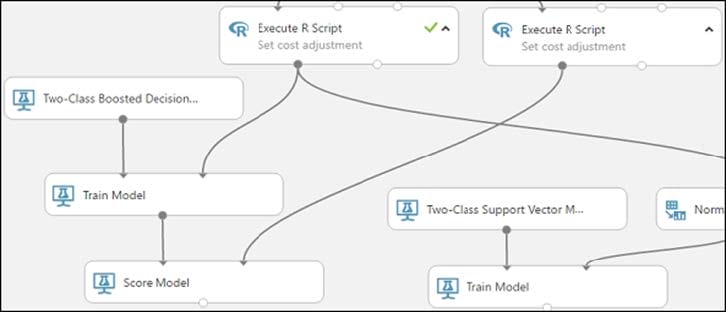

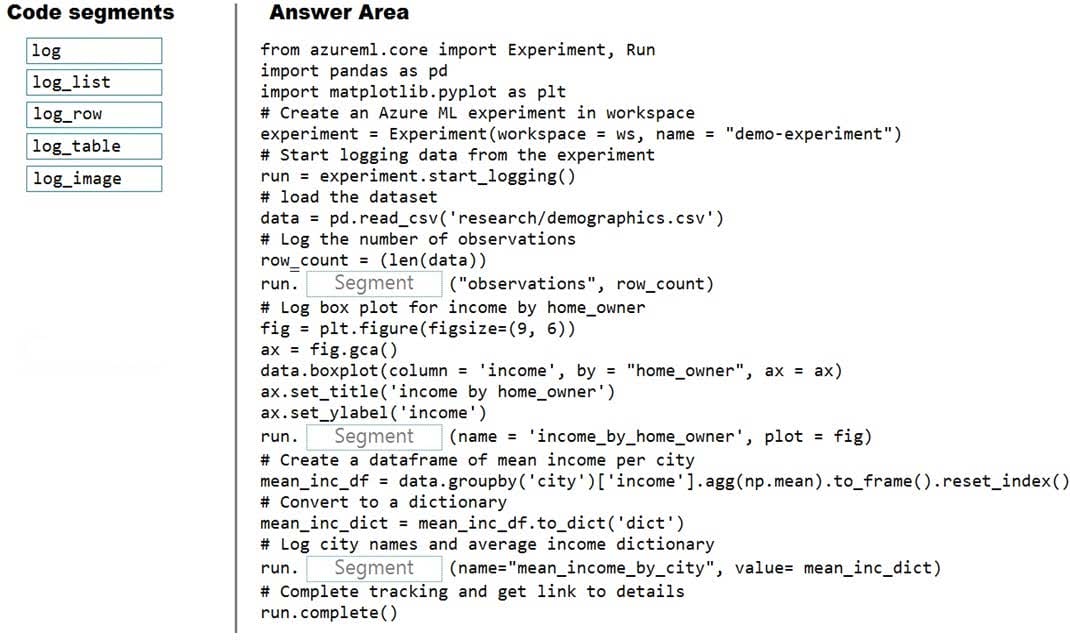
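In the designer, AUC for a two-class model is reported by the Evaluate Model module, which works on a scored dataset. As a rough illustration of what that number means, the sketch below computes AUC in plain Python as the fraction of positive/negative pairs in which the positive example receives the higher score. The function name `roc_auc` and the sample labels and scores are assumptions made for this example, not part of the question.

```python
def roc_auc(labels, scores):
    """AUC as the probability that a random positive outranks a random negative.

    Ties between a positive and a negative score count as half a win.
    """
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative example")

    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Hypothetical held-out labels and the probabilities a scored model assigned.
test_labels = [0, 0, 1, 1]
test_scores = [0.1, 0.4, 0.35, 0.8]
print(roc_auc(test_labels, test_scores))  # 3 of 4 pairs ranked correctly -> 0.75
```

The pairwise loop is quadratic and is only meant to make the definition visible; a production metric would sort the scores instead.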

Question 152:

DRAG DROP

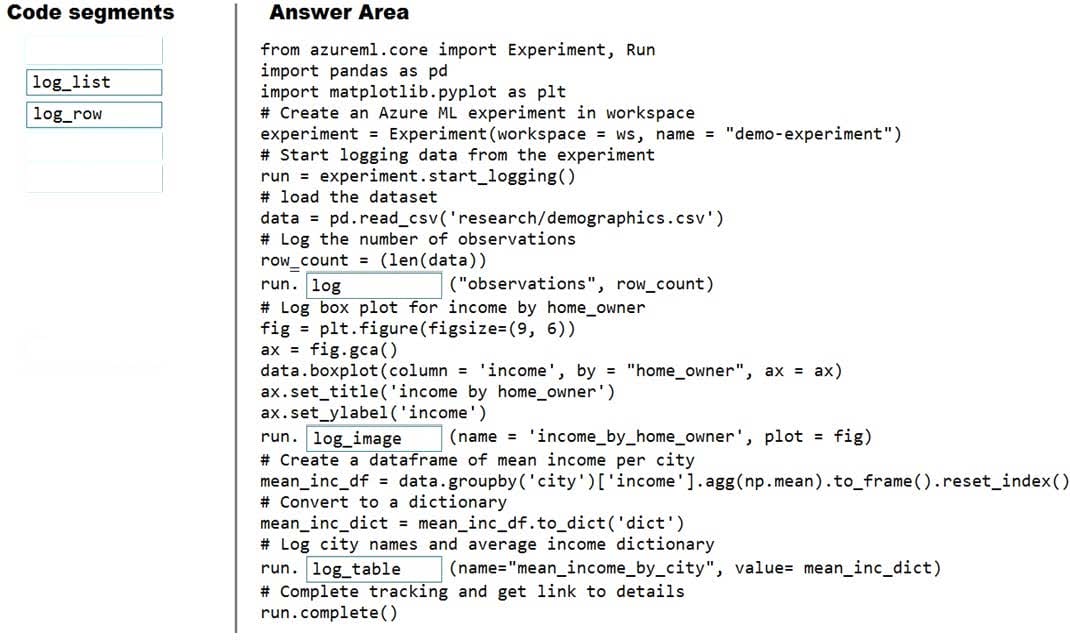

You plan to explore demographic data for home ownership in various cities. The data is in a CSV file with the following format:

age,city,income,home_owner
21,Chicago,50000,0
35,Seattle,120000,1
23,Seattle,65000,0
45,Seattle,130000,1
18,Chicago,48000,0

You need to run an experiment in your Azure Machine Learning workspace to explore the data and log the results. The experiment must log the following information:

1. the number of observations in the dataset

2. a box plot of income by home_owner

3. a dictionary containing the city names and the average income for each city

You need to use the appropriate logging methods of the experiment's run object to log the required information.

How should you complete the code? To answer, drag the appropriate code segments to the correct locations. Each code segment may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Select and Place:

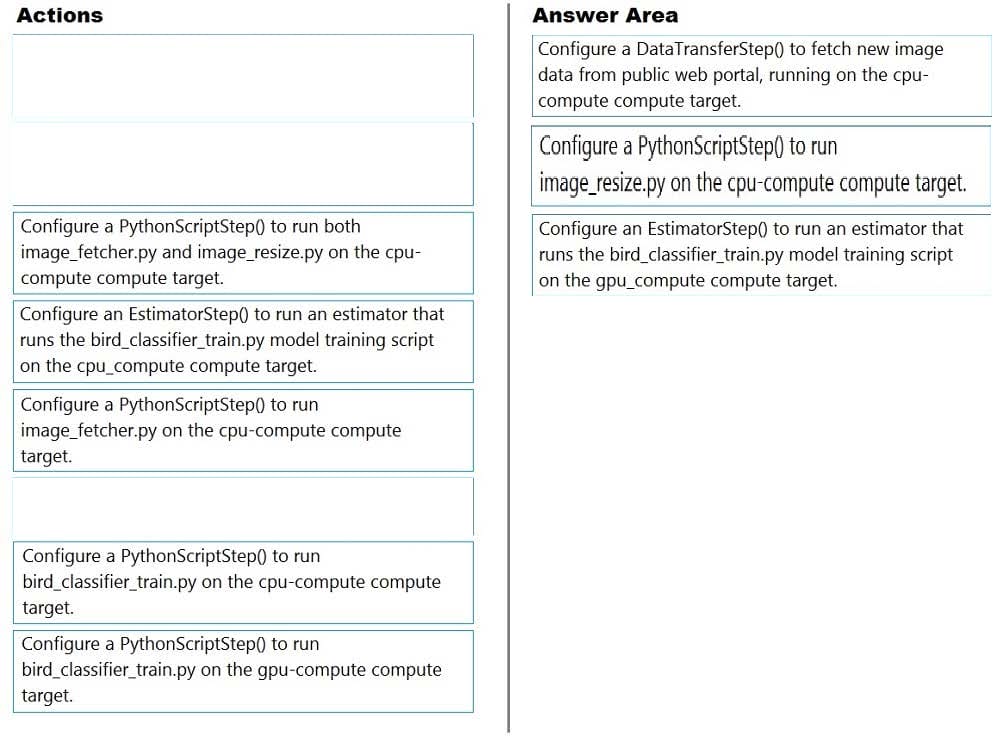

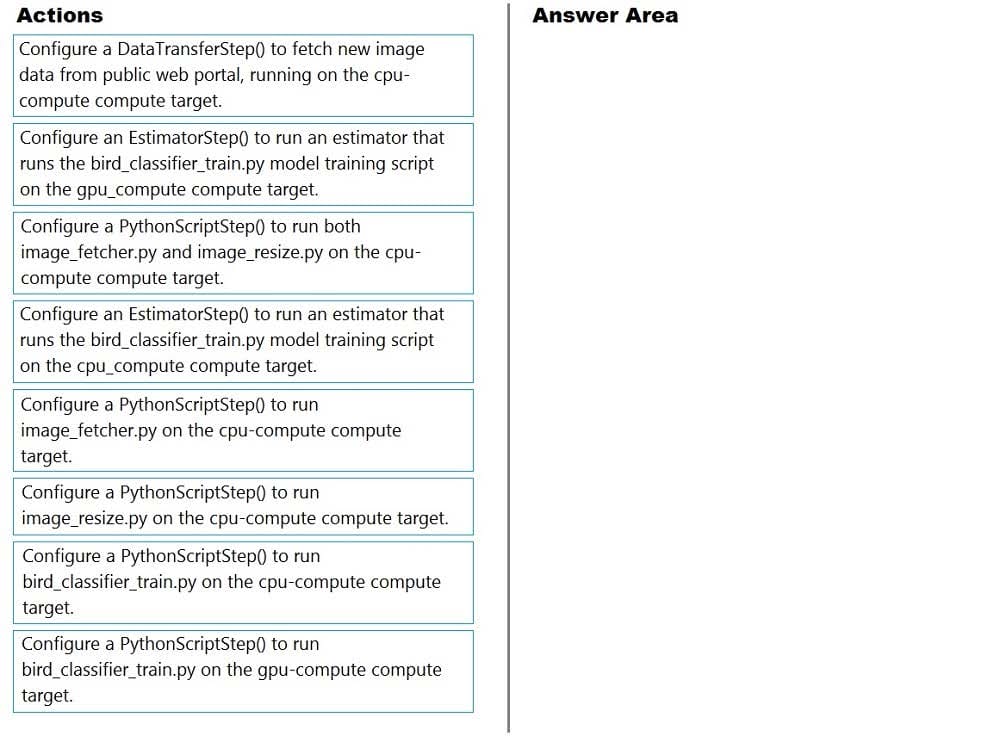
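The three logging requirements in Question 152 can be sketched as follows. The `Run` class in the Azure ML SDK v1 (`azureml.core`) provides `log` for scalar values, `log_image` for plots, and `log_table` for dictionaries. So that the sketch runs without a workspace, it uses `RecordingRun`, a hypothetical stand-in that only records calls. The metric names, the helper names, and the column-oriented shape of the dictionary passed to `log_table` are likewise assumptions made for this example.

```python
import csv
import io
from collections import defaultdict

CSV_TEXT = """age,city,income,home_owner
21,Chicago,50000,0
35,Seattle,120000,1
23,Seattle,65000,0
45,Seattle,130000,1
18,Chicago,48000,0
"""


def summarize(csv_text):
    """Return the row count and a column-oriented table of mean income per city."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    incomes = defaultdict(list)
    for row in rows:
        incomes[row["city"]].append(float(row["income"]))
    cities = sorted(incomes)
    table = {
        "city": cities,
        "mean_income": [sum(incomes[c]) / len(incomes[c]) for c in cities],
    }
    return len(rows), table


def log_results(run, csv_text, fig=None):
    """Log the three required items through a run-like object."""
    count, table = summarize(csv_text)
    run.log("observations", count)                        # 1. a single number
    if fig is not None:
        run.log_image("income_by_home_owner", plot=fig)   # 2. a plot (e.g. a matplotlib figure)
    run.log_table("mean_income_by_city", table)           # 3. a dictionary


class RecordingRun:
    """Hypothetical stand-in for azureml.core.Run; it only records the calls."""

    def __init__(self):
        self.calls = []

    def log(self, name, value):
        self.calls.append(("log", name, value))

    def log_image(self, name, plot=None):
        self.calls.append(("log_image", name, plot))

    def log_table(self, name, value):
        self.calls.append(("log_table", name, value))


run = RecordingRun()
log_results(run, CSV_TEXT)  # no figure is passed, so log_image is skipped here
for call in run.calls:
    print(call)
```

In a real experiment the `run` argument would be the run object obtained from the experiment, and `fig` would be the box plot figure produced by a plotting library.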

Question 153:

DRAG DROP

You create a multi-class image classification deep learning experiment by using the PyTorch framework. You plan to run the experiment on an Azure Compute cluster that has nodes with GPUs.

You need to define an Azure Machine Learning service pipeline to perform the monthly retraining of the image classification model. The pipeline must run with minimal cost and minimize the time required to train the model.

Which three pipeline steps should you run in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Select and Place:

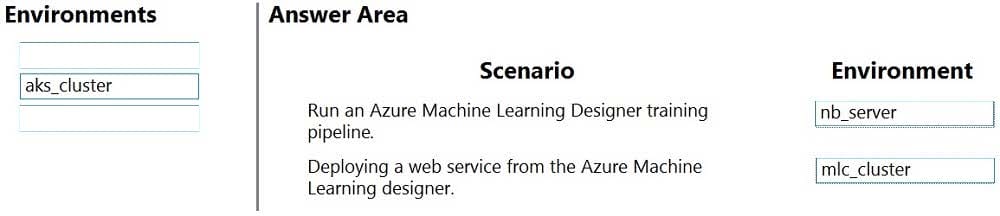

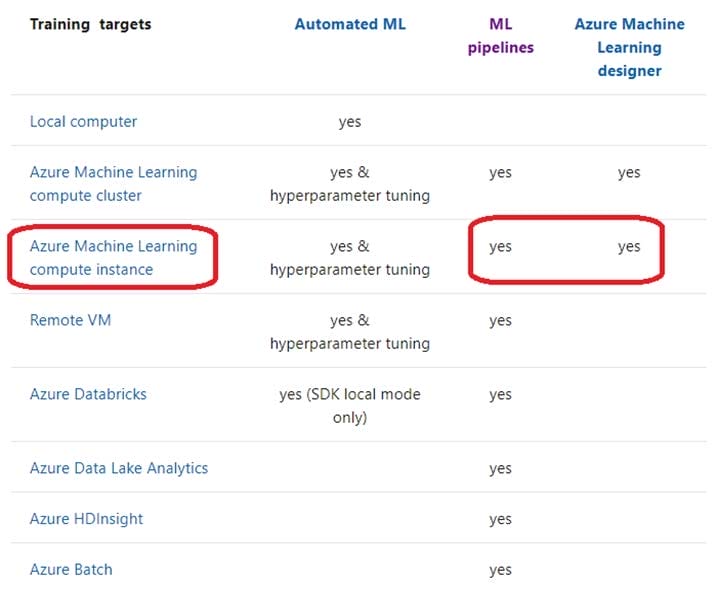

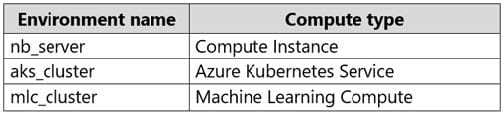

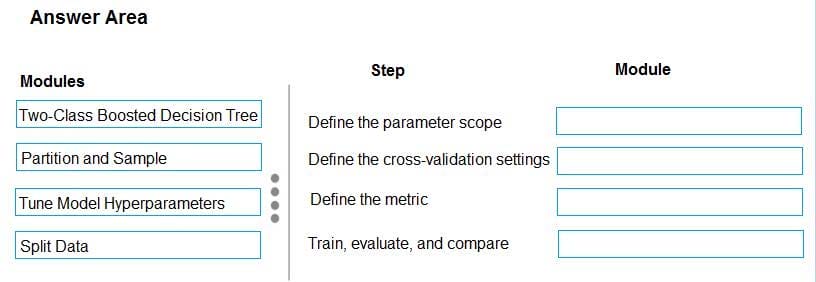

Question 154:

DRAG DROP

An organization uses Azure Machine Learning service and wants to expand their use of machine learning.

You have the following compute environments. The organization does not want to create another compute environment.

You need to determine which compute environment to use for the following scenarios.

Which compute types should you use? To answer, drag the appropriate compute environments to the correct scenarios. Each compute environment may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Select and Place:

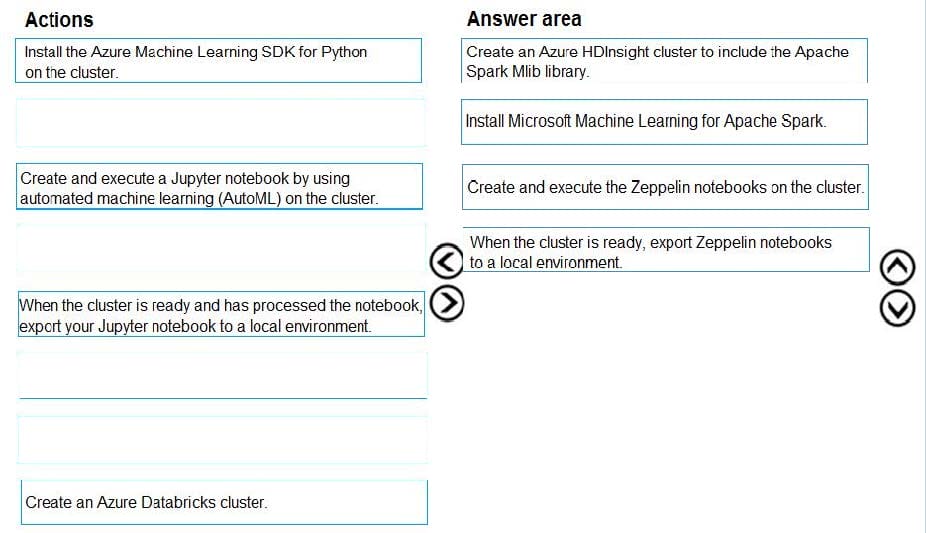

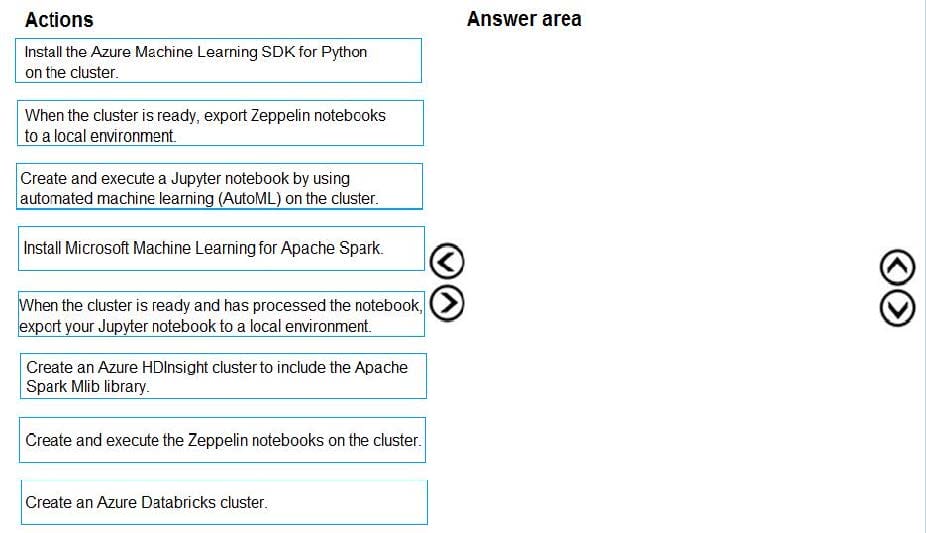

Question 155:

You are building an intelligent solution using machine learning models.

The environment must support the following requirements:

Data scientists must build notebooks in a cloud environment.

Data scientists must use automatic feature engineering and model building in machine learning pipelines.

Notebooks must be deployed to retrain using Spark instances with dynamic worker allocation.

Notebooks must be exportable to be version controlled locally.

You need to create the environment.

Which four actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Select and Place:

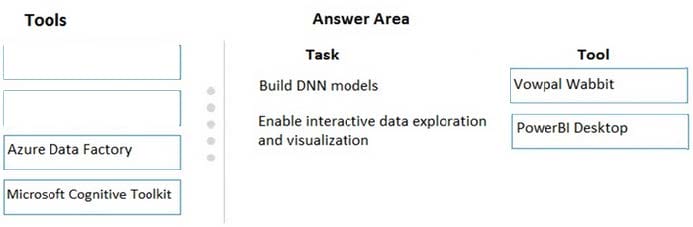

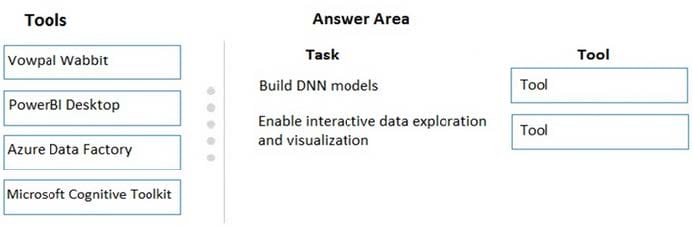

Question 156:

DRAG DROP

You configure a Deep Learning Virtual Machine for Windows.

You need to recommend tools and frameworks to perform the following:

1. Build deep neural network (DNN) models

2. Perform interactive data exploration and visualization

Which tools and frameworks should you recommend? To answer, drag the appropriate tools to the correct tasks. Each tool may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Select and Place:

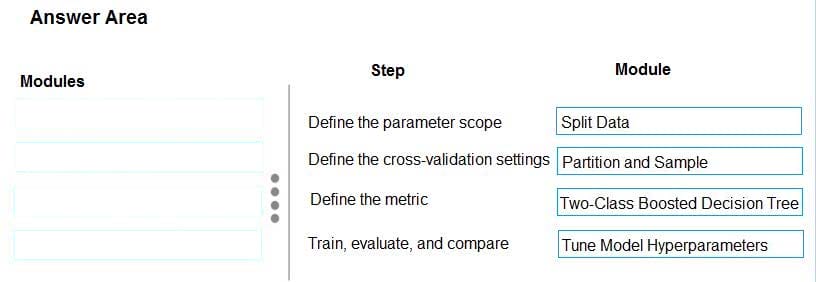

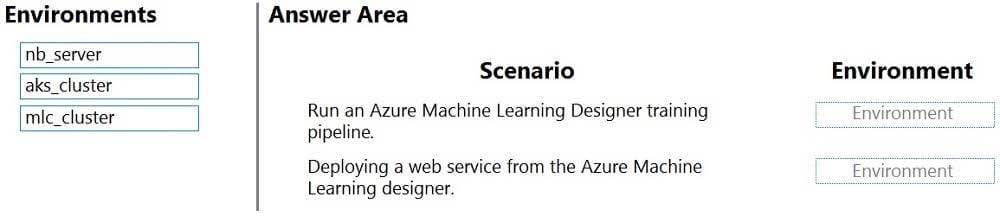

Question 157:

You have a model with a large difference between the training and validation error values.

You must create a new model and perform cross-validation.

You need to identify a parameter set for the new model using Azure Machine Learning Studio.

Which module should you use for each step? To answer, drag the appropriate modules to the correct steps. Each module may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Select and Place:
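As background for Question 157, the sketch below shows the general idea of choosing a parameter value by cross-validation: the data is partitioned into folds, each candidate value is scored on the held-out folds, and the value with the lowest average validation error is kept. It is plain Python rather than Studio modules. The one-parameter ridge model, the function names, the data, and the grid are all assumptions chosen to keep the example small.

```python
def k_fold_indices(n, k):
    """Yield (train_idx, val_idx) pairs for k contiguous folds over n rows."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = list(range(start, start + size))
        train = [i for i in range(n) if i < start or i >= start + size]
        yield train, val
        start += size


def fit_ridge_1d(xs, ys, lam):
    """Slope of a regression through the origin with an L2 penalty lam."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)


def cv_mse(xs, ys, lam, k=3):
    """Mean validation MSE of the ridge model across k folds."""
    errors = []
    for train, val in k_fold_indices(len(xs), k):
        w = fit_ridge_1d([xs[i] for i in train], [ys[i] for i in train], lam)
        errors.append(sum((ys[i] - w * xs[i]) ** 2 for i in val) / len(val))
    return sum(errors) / len(errors)


def sweep(xs, ys, grid, k=3):
    """Score every candidate in grid and return the best one with all scores."""
    scores = {lam: cv_mse(xs, ys, lam, k) for lam in grid}
    best = min(scores, key=scores.get)
    return best, scores


# Hypothetical noise-free data following y = 2x.
xs = [1, 2, 3, 4, 5, 6]
ys = [2, 4, 6, 8, 10, 12]
best, scores = sweep(xs, ys, grid=[0.0, 1.0, 10.0])
print(best, scores)
```

Because this toy data has no noise, the unpenalized candidate wins; on noisy data with a large gap between training and validation error, a non-zero penalty would often score better.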

Question 158:

DRAG DROP

You are producing a multiple linear regression model in Azure Machine Learning Studio.

Several independent variables are highly correlated.

You need to select appropriate methods for conducting effective feature engineering on all the data.

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Select and Place:

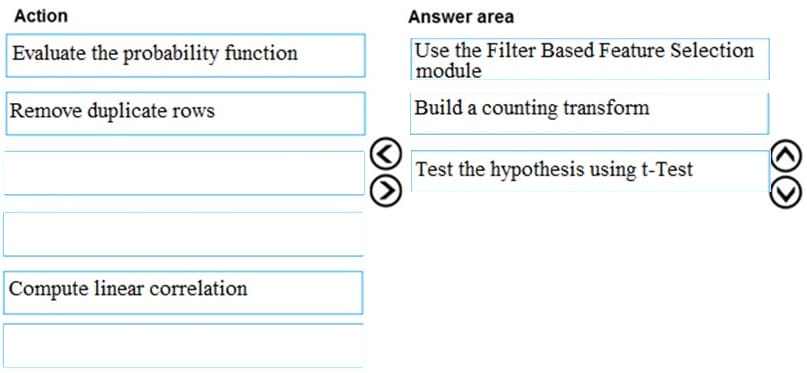

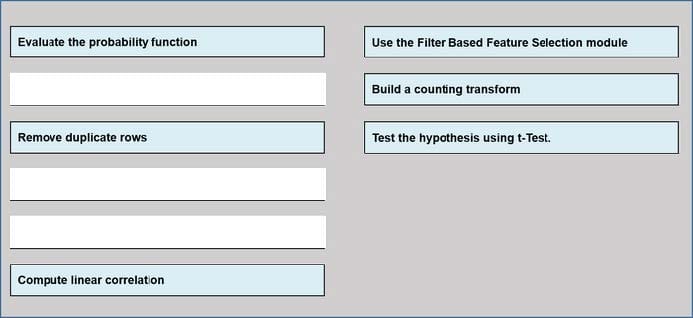

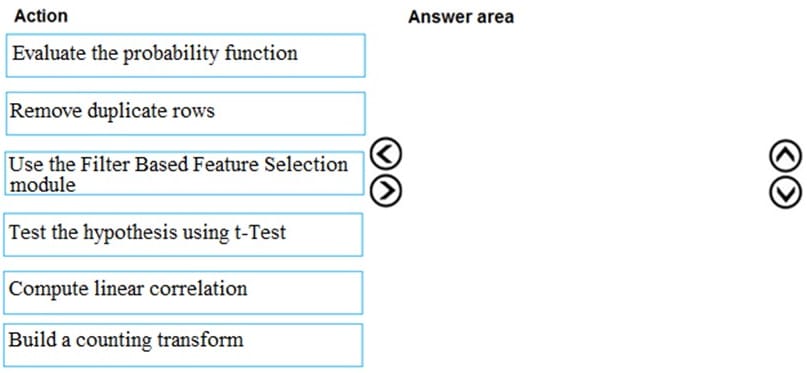

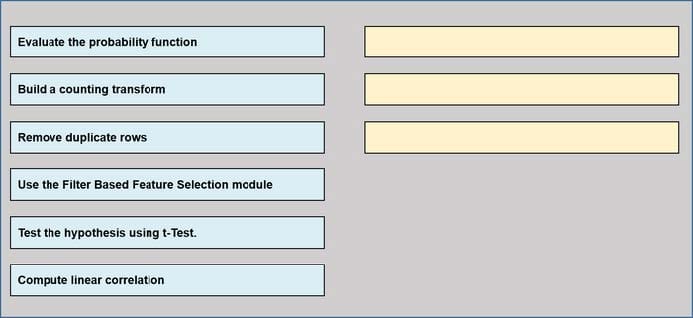

Question 159:

DRAG DROP

You are producing a multiple linear regression model in Azure Machine Learning Studio.

Several independent variables are highly correlated.

You need to select appropriate methods for conducting effective feature engineering on all the data.

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Select and Place:

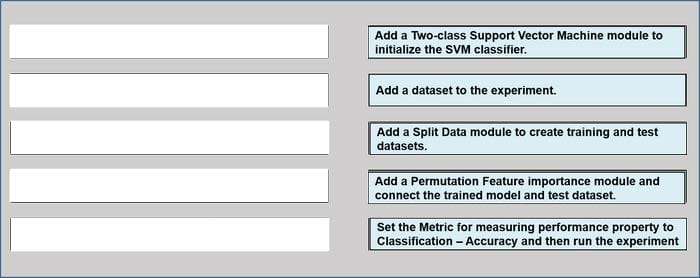

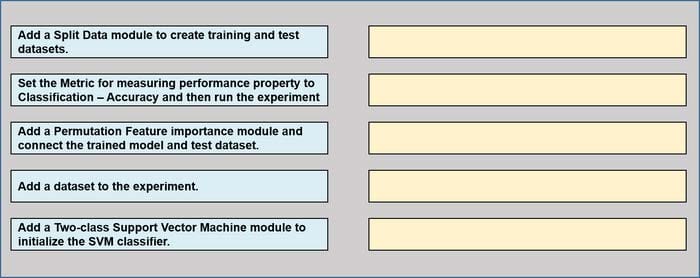

Question 160:

DRAG DROP

You have a dataset that contains over 150 features. You use the dataset to train a Support Vector Machine (SVM) binary classifier.

You need to use the Permutation Feature Importance module in Azure Machine Learning Studio to compute a set of feature importance scores for the dataset.

In which order should you perform the actions? To answer, move all actions from the list of actions to the answer area and arrange them in the correct order.

Select and Place:
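For context on Question 160, permutation feature importance works on a model that has already been trained and a test dataset: each feature column is shuffled in turn, and the drop in the evaluation metric is taken as that feature's score. The sketch below illustrates the idea in plain Python. The toy classifier, the data, the accuracy metric, and the function names are assumptions made for this example, not the Studio module's implementation.

```python
import random


def accuracy(model, X, y):
    """Fraction of rows for which the model predicts the true label."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model, X, y, seed=0):
    """Return, per feature, the accuracy lost when that column is shuffled."""
    rng = random.Random(seed)  # fixed seed so the shuffles are repeatable
    baseline = accuracy(model, X, y)
    scores = []
    for j in range(len(X[0])):
        column = [row[j] for row in X]
        rng.shuffle(column)
        # Rebuild the rows with only column j replaced by its shuffled values.
        shuffled = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
        scores.append(baseline - accuracy(model, shuffled, y))
    return scores


def toy_model(row):
    """Hypothetical trained classifier that looks only at feature 0."""
    return int(row[0] > 0.5)


# Hypothetical test set: feature 0 determines the label, feature 1 is noise.
X_test = [[0.1, 5.0], [0.9, 3.0], [0.2, 4.0], [0.8, 1.0], [0.7, 2.0], [0.3, 6.0]]
y_test = [0, 1, 0, 1, 1, 0]

importances = permutation_importance(toy_model, X_test, y_test)
print(importances)  # feature 1 scores 0.0 because the model never uses it
```

The ordering matters in the same way it does in the question: the model must be trained before any column is shuffled, and the shuffling is applied to the test data rather than the training data.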

