Exam Details

Exam Code: DP-100

Exam Name: Designing and Implementing a Data Science Solution on Azure

Certification: Microsoft Certifications

Vendor: Microsoft

Total Questions: 564 Q&As

Last Updated: Jul 05, 2025

Microsoft Microsoft Certifications DP-100 Questions & Answers

-

Question 181:

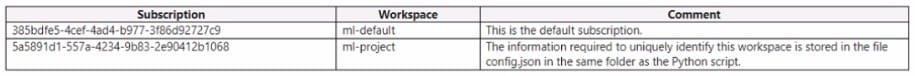

You have the following Azure subscriptions and Azure Machine Learning service workspaces:

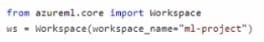

You need to obtain a reference to the ml-project workspace. Solution: Run the following Python code:

Does the solution meet the goal?

A. Yes

B. No

-

Question 182:

You create a training pipeline by using the Azure Machine Learning designer. You need to load data into a machine learning pipeline by using the Import Data component.

Which two data sources could you use? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point.

A. Azure Blob storage container through a registered datastore

B. Azure SQL Database

C. URL via HTTP

D. Azure Data Lake Storage Gen2

E. Registered dataset

-

Question 183:

You create an MLflow model.

You must deploy the model to Azure Machine Learning for batch inference.

You need to create the batch deployment.

Which two components should you use? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point.

A. Compute target

B. Kubernetes online endpoint

C. Model files

D. Online endpoint

E. Environment

-

Question 184:

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You plan to use a Python script to run an Azure Machine Learning experiment. The script creates a reference to the experiment run context, loads data from a file, identifies the set of unique values for the label column, and completes the experiment run:

from azureml.core import Run

import pandas as pd

run = Run.get_context()

data = pd.read_csv('data.csv')

label_vals = data['label'].unique()

# Add code to record metrics here

run.complete()

The experiment must record the unique labels in the data as metrics for the run that can be reviewed later.

You must add code to the script to record the unique label values as run metrics at the point indicated by the comment.

Solution: Replace the comment with the following code:

run.log_table('Label Values', label_vals)

Does the solution meet the goal?

A. Yes

B. No
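
As a hedged illustration of the data shapes involved: in the azureml.core SDK, `Run.log_table` is documented as taking a dictionary that maps column names to lists of values, whereas `label_vals` in the script is a flat array of unique labels. The sketch below uses only the standard library and a hypothetical helper, `looks_like_table`, to make that difference concrete. It does not call Azure Machine Learning, and the label values are made up.

```python
def looks_like_table(value):
    """Return True if value is a non-empty dict of equal-length lists (hypothetical check)."""
    if not isinstance(value, dict) or not value:
        return False
    columns = list(value.values())
    if not all(isinstance(col, list) for col in columns):
        return False
    # Every column must have the same number of rows.
    return len({len(col) for col in columns}) == 1


label_vals = ["cat", "dog", "bird"]           # stand-in for data['label'].unique()
table_payload = {"Label Values": label_vals}  # same data wrapped as a one-column table

print(looks_like_table(label_vals))
print(looks_like_table(table_payload))
```

The flat sequence fails the check and the dictionary passes it, which is the distinction to keep in mind when reading the proposed solution.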

-

Question 185:

You create a classification model with a dataset that contains 100 samples with Class A and 10,000 samples with Class B.

The variation of Class B is very high.

You need to resolve imbalances.

Which method should you use?

A. Partition and Sample

B. Cluster Centroids

C. Tomek links

D. Synthetic Minority Oversampling Technique (SMOTE)

-

Question 186:

You use Azure Machine Learning to train a model based on a dataset named dataset1.

You define a dataset monitor and create a dataset named dataset2 that contains new data.

You need to compare dataset1 and dataset2 by using the Azure Machine Learning SDK for Python.

Which method of the DataDriftDetector class should you use?

A. run

B. get

C. backfill

D. update

-

Question 187:

You use an Azure Machine Learning workspace.

You have a trained model that must be deployed as a web service. Users must authenticate by using Azure Active Directory.

What should you do?

A. Deploy the model to Azure Kubernetes Service (AKS). During deployment, set the token_auth_enabled parameter of the target configuration object to true

B. Deploy the model to Azure Container Instances. During deployment, set the auth_enabled parameter of the target configuration object to true

C. Deploy the model to Azure Container Instances. During deployment, set the token_auth_enabled parameter of the target configuration object to true

D. Deploy the model to Azure Kubernetes Service (AKS). During deployment, set the auth.enabled parameter of the target configuration object to true

-

Question 188:

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You plan to use a Python script to run an Azure Machine Learning experiment. The script creates a reference to the experiment run context, loads data from a file, identifies the set of unique values for the label column, and completes the experiment run:

from azureml.core import Run

import pandas as pd

run = Run.get_context()

data = pd.read_csv('data.csv')

label_vals = data['label'].unique()

# Add code to record metrics here

run.complete()

The experiment must record the unique labels in the data as metrics for the run that can be reviewed later.

You must add code to the script to record the unique label values as run metrics at the point indicated by the comment.

Solution: Replace the comment with the following code:

run.log_list('Label Values', label_vals)

Does the solution meet the goal?

A. Yes

B. No
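
As a hedged aside on what the script hands to the logging call: `Run.log_list` in the azureml.core SDK is documented as taking a metric name and a list of values. The standard-library sketch below imitates the `unique()` step on a made-up label column, keeping values in order of first appearance as pandas does, and produces the kind of plain list such a call would receive. The helper name `unique_in_order` is hypothetical, and nothing here contacts Azure Machine Learning.

```python
def unique_in_order(values):
    """Return the distinct values in order of first appearance."""
    return list(dict.fromkeys(values))


labels = ["dog", "cat", "dog", "bird", "cat"]  # made-up label column
label_vals = unique_in_order(labels)

# In the question's script this list would be passed as:
#   run.log_list('Label Values', label_vals)
print(label_vals)  # ['dog', 'cat', 'bird']
```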

-

Question 189:

You use the Azure Machine Learning designer to create and run a training pipeline.

The pipeline must be run every night to inference predictions from a large volume of files. The folder where the files will be stored is defined as a dataset.

You need to publish the pipeline as a REST service that can be used for the nightly inferencing run.

What should you do?

A. Create a batch inference pipeline

B. Set the compute target for the pipeline to an inference cluster

C. Create a real-time inference pipeline

D. Clone the pipeline

-

Question 190:

You have a Jupyter Notebook that contains Python code that is used to train a model.

You must create a Python script for the production deployment. The solution must minimize code maintenance.

Which two actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. Refactor the Jupyter Notebook code into functions

B. Save each function to a separate Python file

C. Define a main() function in the Python script

D. Remove all comments and functions from the Python script
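
To make options A and C concrete, here is a minimal sketch of notebook-style code refactored into functions with a `main()` entry point. The function names, the toy least-squares model, and the training data are assumptions for illustration only and are not taken from the question.

```python
def load_data(rows):
    """Split (feature, label) pairs into separate lists."""
    features = [x for x, _ in rows]
    labels = [y for _, y in rows]
    return features, labels


def train_model(features, labels):
    """Fit y = slope * x by least squares through the origin."""
    numerator = sum(x * y for x, y in zip(features, labels))
    denominator = sum(x * x for x in features)
    return numerator / denominator


def main():
    rows = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]  # made-up training data
    features, labels = load_data(rows)
    slope = train_model(features, labels)
    print(f"slope={slope:.2f}")
    return slope


if __name__ == "__main__":
    main()
```

Keeping each step in its own function lets the steps be tested or reused individually, while `main()` gives the script a single place where the workflow is wired together.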
