Exam Details

Exam Code: DP-300

Exam Name: Administering Relational Databases on Microsoft Azure

Certification: Microsoft Certifications

Vendor: Microsoft

Total Questions: 368 Q&As

Last Updated: Apr 01, 2025

Microsoft Microsoft Certifications DP-300 Questions & Answers

-

Question 111:

You are designing an anomaly detection solution for streaming data from an Azure IoT hub. The solution must meet the following requirements:

1. Send the output to Azure Synapse.

2. Identify spikes and dips in time series data.

3. Minimize development and configuration effort.

Which should you include in the solution?

A. Azure SQL Database

B. Azure Databricks

C. Azure Stream Analytics

-

Question 112:

You have an Azure Synapse Analytics workspace named WS1 that contains an Apache Spark pool named Pool1.

You plan to create a database named DB1 in Pool1.

You need to ensure that when tables are created in DB1, the tables are available automatically as external tables to the built-in serverless SQL pool.

Which format should you use for the tables in DB1?

A. JSON

B. CSV

C. Parquet

D. ORC

-

Question 113:

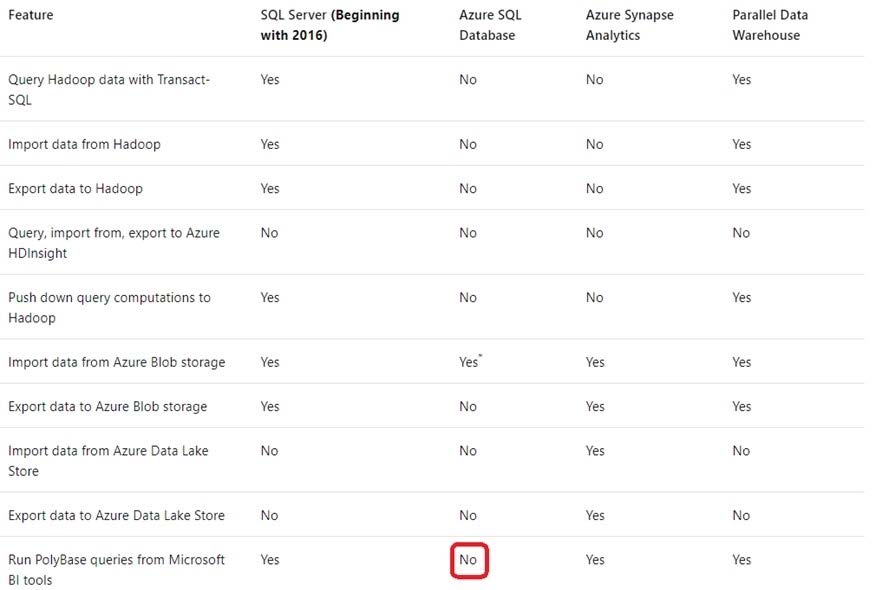

You have a Microsoft SQL Server 2019 database named DB1 that uses the following database-level and instance-level features.

1. Clustered columnstore indexes

2. Automatic tuning

3. Change tracking

4. PolyBase

You plan to migrate DB1 to an Azure SQL database.

What feature should be removed or replaced before DB1 can be migrated?

A. Clustered columnstore indexes

B. PolyBase

C. Change tracking

D. Automatic tuning

-

Question 114:

You have a Microsoft SQL Server 2019 instance in an on-premises datacenter. The instance contains a 4-TB database named DB1.

You plan to migrate DB1 to an Azure SQL Database managed instance.

What should you use to minimize downtime and data loss during the migration?

A. distributed availability groups

B. database mirroring

C. log shipping

D. Database Migration Assistant

-

Question 115:

You are designing a streaming data solution that will ingest variable volumes of data.

You need to ensure that you can change the partition count after creation.

Which service should you use to ingest the data?

A. Azure Event Hubs Standard

B. Azure Stream Analytics

C. Azure Data Factory

D. Azure Event Hubs Dedicated

-

Question 116:

You have an Azure Synapse Analytics Apache Spark pool named Pool1.

You plan to load JSON files from an Azure Data Lake Storage Gen2 container into the tables in Pool1. The structure and data types vary by file.

You need to load the files into the tables. The solution must maintain the source data types.

What should you do?

A. Load the data by using PySpark.

B. Load the data by using the OPENROWSET Transact-SQL command in an Azure Synapse Analytics serverless SQL pool.

C. Use a Get Metadata activity in Azure Data Factory.

D. Use a Conditional Split transformation in an Azure Synapse data flow.

-

Question 117:

You have 20 Azure SQL databases provisioned by using the vCore purchasing model.

You plan to create an Azure SQL Database elastic pool and add the 20 databases.

Which three metrics should you use to size the elastic pool to meet the demands of your workload? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. total size of all the databases

B. geo-replication support

C. number of concurrently peaking databases * peak CPU utilization per database

D. maximum number of concurrent sessions for all the databases

E. total number of databases * average CPU utilization per database
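The quantities named in options A, C, and E can be worked through with a little arithmetic. The following is a minimal sketch using hypothetical workload numbers. Taking the larger of the two compute estimates follows Azure's published elastic pool sizing guidance. The class, function, and field names are illustrative only and do not come from the question.

```python
from dataclasses import dataclass


@dataclass
class DbProfile:
    """Hypothetical per-database workload profile (values are assumptions)."""
    avg_vcores: float   # average CPU utilization, expressed in vCores
    peak_vcores: float  # peak CPU utilization, expressed in vCores
    size_gb: float      # data size in GB


def estimate_pool(dbs, concurrently_peaking):
    """Return (compute estimate in vCores, storage estimate in GB)."""
    n = len(dbs)
    mean_avg = sum(d.avg_vcores for d in dbs) / n
    max_peak = max(d.peak_vcores for d in dbs)

    by_average = n * mean_avg                  # total databases * average CPU per database
    by_peak = concurrently_peaking * max_peak  # concurrently peaking databases * peak CPU per database
    total_size = sum(d.size_gb for d in dbs)   # total size of all the databases

    # The pool must cover whichever compute estimate is larger.
    return max(by_average, by_peak), total_size


# 20 identical databases, 4 of which are assumed to peak at the same time.
dbs = [DbProfile(avg_vcores=0.5, peak_vcores=4.0, size_gb=50.0) for _ in range(20)]
vcores, storage_gb = estimate_pool(dbs, concurrently_peaking=4)
print(vcores, storage_gb)
```

With these assumed numbers the peak-based estimate (4 × 4.0 vCores) exceeds the average-based estimate (20 × 0.5 vCores), so the concurrent peaks drive the compute size while the summed data size drives the storage size.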

-

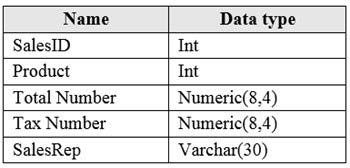

Question 118:

You have an Azure SQL database that contains a table named factSales. FactSales contains the columns shown in the following table.

FactSales has 6 billion rows and is loaded nightly by using a batch process.

Which type of compression provides the greatest space reduction for the database?

A. page compression

B. row compression

C. columnstore compression

D. columnstore archival compression

-

Question 119:

You are designing an enterprise data warehouse in Azure Synapse Analytics that will contain a table named Customers. Customers will contain credit card information.

You need to recommend a solution to provide salespeople with the ability to view all the entries in Customers. The solution must prevent all the salespeople from viewing or inferring the credit card information.

What should you include in the recommendation?

A. row-level security

B. data masking

C. Always Encrypted

D. column-level security

-

Question 120:

You have a data warehouse in Azure Synapse Analytics.

You need to ensure that the data in the data warehouse is encrypted at rest.

What should you enable?

A. Transparent Data Encryption (TDE)

B. Advanced Data Security for this database

C. Always Encrypted for all columns

D. Secure transfer required


Tips on How to Prepare for the Exams

Certification exams are becoming increasingly important, and more and more enterprises require them of job applicants. But how do you prepare for an exam effectively, in a short time and with less effort? How do you get an ideal result, and how do you find the most reliable resources? On Vcedump.com, you will find all the answers. Vcedump.com provides not only Microsoft exam questions, answers, and explanations but also complete assistance with your exam preparation and certification application. If you are unsure about your DP-300 exam preparation or Microsoft certification application, visit Vcedump.com to find your solutions.