Exam Details

Exam Code: DP-203
Exam Name: Data Engineering on Microsoft Azure
Certification: Microsoft Certifications
Vendor: Microsoft
Total Questions: 398 Q&As
Last Updated: Apr 15, 2025

Microsoft Certifications DP-203 Questions & Answers

-

Question 161:

You are designing a folder structure for the files in an Azure Data Lake Storage Gen2 account. The account has one container that contains three years of data. You need to recommend a folder structure that meets the following requirements:

1. Supports partition elimination for queries by Azure Synapse Analytics serverless SQL pools
2. Supports fast data retrieval for data from the current month
3. Simplifies data security management by department

Which folder structure should you recommend?

A. \Department\DataSource\YYYY\MM\DataFile_YYYYMMDD.parquet

B. \DataSource\Department\YYYYMM\DataFile_YYYYMMDD.parquet

C. \DD\MM\YYYY\Department\DataSource\DataFile_DDMMYY.parquet

D. \YYYY\MM\DD\Department\DataSource\DataFile_YYYYMMDD.parquet
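To illustrate how date folders enable partition elimination, the sketch below keeps only the files whose year and month folder names match a filter, without opening any file. This mimics the idea behind filtering on the `filepath()` function in serverless SQL pool queries; it is not Synapse's implementation. The file paths are hypothetical, and `layout` is assumed to name each folder level, with separate `YYYY` and `MM` levels.

```python
from typing import Iterable


def prune_paths(paths: Iterable[str], layout: str, year: str, month: str) -> list[str]:
    """Keep only paths whose YYYY and MM folders match, without reading any file.

    `layout` names the folder levels, e.g. "Department/DataSource/YYYY/MM";
    the file name is assumed to follow the last folder level.
    """
    levels = layout.split("/")
    year_pos, month_pos = levels.index("YYYY"), levels.index("MM")
    kept = []
    for path in paths:
        # Normalize Windows-style separators and drop the leading separator.
        parts = path.replace("\\", "/").strip("/").split("/")
        # Compare folder names only; files in non-matching folders are skipped.
        if parts[year_pos] == year and parts[month_pos] == month:
            kept.append(path)
    return kept


# Hypothetical files following a Department/DataSource/YYYY/MM layout.
files = [
    r"\Sales\CRM\2025\04\DataFile_20250401.parquet",
    r"\Sales\CRM\2025\03\DataFile_20250331.parquet",
    r"\HR\Payroll\2025\04\DataFile_20250415.parquet",
]
print(prune_paths(files, "Department/DataSource/YYYY/MM", "2025", "04"))
```

A query for the current month touches only the matching month folders, which is what makes folder-level date partitioning cheap to filter.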

-

Question 162:

You have an Azure Databricks workspace and an Azure Data Lake Storage Gen2 account named storage1.

New files are uploaded daily to storage1.

You need to recommend a solution that configures storage1 as a structured streaming source. The solution must meet the following requirements:

Incrementally process new files as they are uploaded to storage1.

Minimize implementation and maintenance effort.

Minimize the cost of processing millions of files.

Support schema inference and schema drift.

Which should you include in the recommendation?

A. Auto Loader

B. Apache Spark FileStreamSource

C. COPY INTO

D. Azure Data Factory

-

Question 163:

You have the following Azure Data Factory pipelines:

Ingest Data from System1

Ingest Data from System2

Populate Dimensions

Populate Facts

Ingest Data from System1 and Ingest Data from System2 have no dependencies. Populate Dimensions must execute after Ingest Data from System1 and Ingest Data from System2. Populate Facts must execute after the Populate Dimensions pipeline. All the pipelines must execute every eight hours.

What should you do to schedule the pipelines for execution?

A. Add an event trigger to all four pipelines.

B. Create a parent pipeline that contains the four pipelines and use an event trigger.

C. Create a parent pipeline that contains the four pipelines and use a schedule trigger.

D. Add a schedule trigger to all four pipelines.
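The dependency rules in this question form a small directed acyclic graph. The sketch below uses Python's standard `graphlib` module to derive an execution order that respects them, grouping pipelines that can start together into stages. It models only the ordering, not Data Factory triggers or activities.

```python
from graphlib import TopologicalSorter

# Each pipeline maps to the set of pipelines that must finish before it starts.
dependencies = {
    "Ingest Data from System1": set(),
    "Ingest Data from System2": set(),
    "Populate Dimensions": {"Ingest Data from System1", "Ingest Data from System2"},
    "Populate Facts": {"Populate Dimensions"},
}

sorter = TopologicalSorter(dependencies)
sorter.prepare()
stages = []
while sorter.is_active():
    # Pipelines whose prerequisites have all completed.
    ready = sorted(sorter.get_ready())
    stages.append(ready)
    sorter.done(*ready)

for number, stage in enumerate(stages, start=1):
    print(f"Stage {number}: {', '.join(stage)}")
```

Pipelines in the same stage have no dependency on each other and could run in parallel; each eight-hour run would repeat the same order.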

-

Question 164:

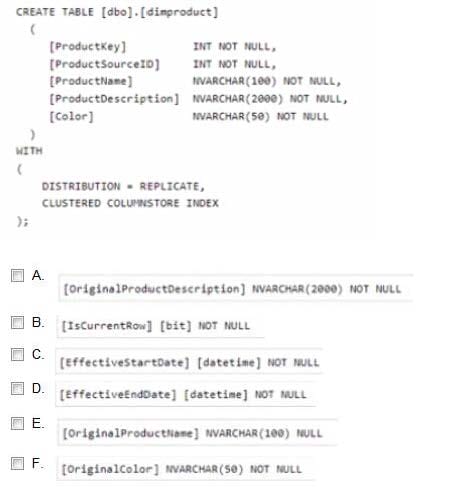

You are implementing a star schema in an Azure Synapse Analytics dedicated SQL pool.

You plan to create a table named DimProduct.

DimProduct must be a Type 3 slowly changing dimension (SCD) table that meets the following requirements:

The values in two columns named ProductKey and ProductSourceID will remain the same.

The values in three columns named ProductName, ProductDescription, and Color can change.

You need to add additional columns to complete the following table definition.

A. Option A

B. Option B

C. Option C

D. Option D

E. Option E

F. Option F
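A Type 3 SCD keeps limited history by storing an earlier value in an extra column next to the current value, instead of adding new rows as Type 2 does. The sketch below models one DimProduct row in Python. The `previous_*` column names are hypothetical; the real column names and data types come from the exhibit and are not reproduced here.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class DimProductRow:
    # Columns whose values never change.
    product_key: int
    product_source_id: int
    # Current values of the attributes that can change.
    product_name: str
    product_description: str
    color: str
    # Hypothetical Type 3 history columns: one prior value per changing attribute.
    previous_product_name: Optional[str] = None
    previous_product_description: Optional[str] = None
    previous_color: Optional[str] = None


def apply_type3_change(row: DimProductRow, **changes: str) -> DimProductRow:
    """Overwrite current values, moving each old value to its previous_* column."""
    updates = {}
    for column, new_value in changes.items():
        old_value = getattr(row, column)
        if old_value != new_value:
            updates[column] = new_value
            updates[f"previous_{column}"] = old_value
    return replace(row, **updates)


row = DimProductRow(1, 1001, "Road Bike", "Aluminium frame", "Red")
row = apply_type3_change(row, color="Blue")
print(row.color, row.previous_color)
```

Only one prior value survives: a second color change would overwrite `previous_color`, which is the trade-off Type 3 makes in exchange for keeping a single row per product.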

-

Question 165:

You have an Azure Synapse Analytics dedicated SQL pool named Pool1 and a database named DB1. DB1 contains a fact table named Table1.

You need to identify the extent of the data skew in Table1.

What should you do in Synapse Studio?

A. Connect to the built-in pool and query sys.dm_pdw_sys_info.

B. Connect to Pool1 and run DBCC CHECKALLOC.

C. Connect to the built-in pool and run DBCC CHECKALLOC.

D. Connect to Pool1 and query sys.dm_pdw_nodes_db_partition_stats.
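Data skew means that a table's rows are spread unevenly across the 60 distributions of a dedicated SQL pool. Given per-distribution row counts (how you obtain them in Synapse is outside this sketch), the code below computes one common heuristic, 100 * (1 - average / maximum). The metric is an assumption rather than an official Synapse formula, and the sample counts are made up.

```python
from statistics import mean


def skew_percent(row_counts: list[int]) -> float:
    """Return 100 * (1 - avg/max) over per-distribution row counts.

    0 means perfectly even; values near 100 mean one distribution holds most rows.
    """
    largest = max(row_counts)
    if largest == 0:
        return 0.0
    return 100.0 * (1.0 - mean(row_counts) / largest)


# Hypothetical counts for 60 distributions: 59 even ones plus one hot distribution.
counts = [1_000] * 59 + [31_000]
print(f"skew: {skew_percent(counts):.1f}%")
```

Because every distribution must finish before a distributed query completes, the largest distribution sets the pace, which is why the heuristic compares the average against the maximum.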

-

Question 166:

You are designing a star schema for a dataset that contains records of online orders. Each record includes an order date, an order due date, and an order ship date.

You need to ensure that the design provides the fastest query times of the records when querying for arbitrary date ranges and aggregating by fiscal calendar attributes.

Which two actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. Use built-in SQL functions to extract date attributes.

B. In the fact table, use integer columns for the date fields.

C. Use DateTime columns for the date fields.

D. Create a date dimension table that has an integer key in the format of yyyymmdd.
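An integer date key in yyyymmdd form is simply year * 10000 + month * 100 + day, so keys sort in the same order as the dates they encode and support range predicates. The sketch below converts in both directions and builds small date-dimension rows with fiscal attributes. The July fiscal-year start and the convention of naming a fiscal year after the calendar year in which it ends are hypothetical, because fiscal calendars vary by organization.

```python
from datetime import date, timedelta


def to_date_key(d: date) -> int:
    """Encode a date as an integer in yyyymmdd form, e.g. 2025-04-15 -> 20250415."""
    return d.year * 10_000 + d.month * 100 + d.day


def from_date_key(key: int) -> date:
    """Decode a yyyymmdd integer back to a date."""
    return date(key // 10_000, key // 100 % 100, key % 100)


def date_dimension_row(d: date, fiscal_start_month: int = 7) -> dict:
    """One date-dimension row; fiscal_start_month=7 is a hypothetical July start."""
    # Fiscal year is named after the calendar year in which it ends (assumption).
    fiscal_year = d.year + 1 if d.month >= fiscal_start_month else d.year
    fiscal_month = (d.month - fiscal_start_month) % 12 + 1
    return {
        "DateKey": to_date_key(d),
        "FiscalYear": fiscal_year,
        "FiscalQuarter": (fiscal_month - 1) // 3 + 1,
    }


# Rows for a few days around the fiscal year boundary; a fact table would
# store only the integer DateKey values and join to rows like these.
start = date(2025, 6, 29)
rows = [date_dimension_row(start + timedelta(days=i)) for i in range(4)]
for row in rows:
    print(row)
```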

-

Question 167:

You have an Azure Data Lake Storage Gen2 account that contains two folders named Folder1 and Folder2.

You use Azure Data Factory to copy multiple files from Folder1 to Folder2.

You receive the following error.

Operation on target Copy_sks failed: Failure happened on 'Sink' side.

ErrorCode=DelimitedTextMoreColumnsThanDefined,

'Type=Microsoft.DataTransfer.Common.Shared.HybridDeliveryException,

Message=Error found when processing 'Csv/Tsv Format Text' source '0_2020_11_09_11_43_32.avro' with row number 53: found more columns than expected column count 27., Source=Microsoft.DataTransfer.Common,'

What should you do to resolve the error?

A. Add an explicit mapping.

B. Enable fault tolerance to skip incompatible rows.

C. Lower the degree of copy parallelism.

D. Change the Copy activity setting to Binary Copy.
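The error reports that a source row parsed into more fields than the 27 columns the dataset defines. The sketch below uses Python's `csv` module to locate such rows in a delimited file. The sample data and the expected count of 3 are made up for brevity, and the check only approximates how Data Factory's delimited-text parser counts columns.

```python
import csv
import io


def rows_with_extra_columns(text: str, expected: int, delimiter: str = ",") -> list[tuple[int, int]]:
    """Return (row_number, column_count) for rows wider than `expected`.

    Row numbers are 1-based and include the header row, if any.
    """
    reader = csv.reader(io.StringIO(text), delimiter=delimiter)
    return [
        (number, len(fields))
        for number, fields in enumerate(reader, start=1)
        if len(fields) > expected
    ]


# Hypothetical sample: row 3 has an unquoted comma inside a value.
sample = "id,name,city\n1,Ann,Oslo\n2,Bob,Paris, France\n3,\"Cy, Jr.\",Rome\n"
print(rows_with_extra_columns(sample, expected=3))
```

Row 4 is not flagged because its comma sits inside a quoted value, so the parser treats it as part of a single field.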

-

Question 168:

You have an Azure subscription that contains an Azure Synapse Analytics dedicated SQL pool named SQLPool1.

SQLPool1 is currently paused.

You need to restore the current state of SQLPool1 to a new SQL pool.

What should you do first?

A. Create a workspace.

B. Create a user-defined restore point.

C. Resume SQLPool1.

D. Create a new SQL pool.

-

Question 169:

You have an Azure Synapse Analytics dedicated SQL pool named pool1.

You need to perform a monthly audit of SQL statements that affect sensitive data. The solution must minimize administrative effort.

What should you include in the solution?

A. Microsoft Defender for SQL

B. dynamic data masking

C. sensitivity labels

D. workload management

-

Question 170:

You have an Azure subscription that contains an Azure Data Lake Storage account named myaccount1. The myaccount1 account contains two containers named container1 and container2. The subscription is linked to an Azure Active Directory (Azure AD) tenant that contains a security group named Group1.

You need to grant Group1 read access to container1. The solution must use the principle of least privilege.

Which role should you assign to Group1?

A. Storage Blob Data Reader for container1

B. Storage Table Data Reader for container1

C. Storage Blob Data Reader for myaccount1

D. Storage Table Data Reader for myaccount1
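Azure RBAC role assignments are inherited by every resource beneath the scope at which they are made, so a narrower scope grants less. The sketch below checks whether an assignment scope covers a container by comparing resource-ID path segments. The subscription ID and resource group name are hypothetical placeholders, and real authorization is performed by Azure, not by code like this.

```python
def scope_covers(assignment_scope: str, resource_id: str) -> bool:
    """True if resource_id is the assignment scope itself or lies beneath it."""
    # Compare whole path segments (case-insensitively) so that
    # "container1" does not accidentally match "container10".
    scope = assignment_scope.lower().rstrip("/").split("/")
    target = resource_id.lower().rstrip("/").split("/")
    return target[: len(scope)] == scope


# Hypothetical subscription ID and resource group.
account = (
    "/subscriptions/00000000-0000-0000-0000-000000000000"
    "/resourceGroups/rg1/providers/Microsoft.Storage/storageAccounts/myaccount1"
)
container1 = f"{account}/blobServices/default/containers/container1"
container2 = f"{account}/blobServices/default/containers/container2"

for label, scope in [("myaccount1", account), ("container1", container1)]:
    covered = [
        name
        for name, rid in [("container1", container1), ("container2", container2)]
        if scope_covers(scope, rid)
    ]
    print(f"assignment at {label} covers: {covered}")
```

An assignment at the account scope reaches both containers, while an assignment at the container scope reaches only that container.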


Tips on How to Prepare for the Exams

Certification exams are becoming increasingly important, and more and more employers require them when hiring. But how can you prepare for an exam effectively, in a short time and with less effort? How can you achieve an ideal result, and where can you find the most reliable resources? Here on Vcedump.com, you will find all the answers. Vcedump.com provides not only Microsoft exam questions, answers, and explanations but also complete assistance with your exam preparation and certification application. If you are unsure about your DP-203 exam preparation or Microsoft certification application, do not hesitate to visit Vcedump.com to find your solutions.