Exam Details

Exam Code: 1Z0-117

Exam Name: Oracle Database 11g Release 2: SQL Tuning Exam

Certification: Oracle Certifications

Vendor: Oracle

Total Questions: 125 Q&As

Last Updated: Mar 26, 2025

Oracle Oracle Certifications 1Z0-117 Questions & Answers

-

Question 81:

Which two are the fastest methods for fetching a single row from a table based on an equality predicate?

A. Fast full index scan on an index created for a column with unique key

B. Index unique scan on an index created for a column with unique key

C. Row fetch from a single table hash cluster

D. Index range scan on an index created from a column with primary key

E. Row fetch from a table using rowid

-

Question 82:

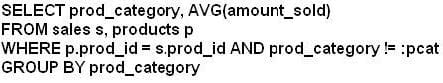

An application user complains about statement execution taking longer than usual. You find that the query uses a bind variable in the WHERE clause as follows:

You want to view the execution plan of the query that takes into account the value in the bind variable PCAT. Which two methods can you use to view the required execution plan?

A. Use the DBMS_XPLAN.DISPLAY function to view the execution plan.

B. Identify the SQL_ID for the statement and use DBMS_XPLAN.DISPLAY_CURSOR for that SQL_ID to view the execution plan.

C. Identify the SQL_ID for the statement and fetch the execution plan from PLAN_TABLE.

D. View the execution plan for the statement from V$SQL_PLAN.

E. Execute the statement with different bind values with AUTOTRACE enabled for the session.

-

Question 83:

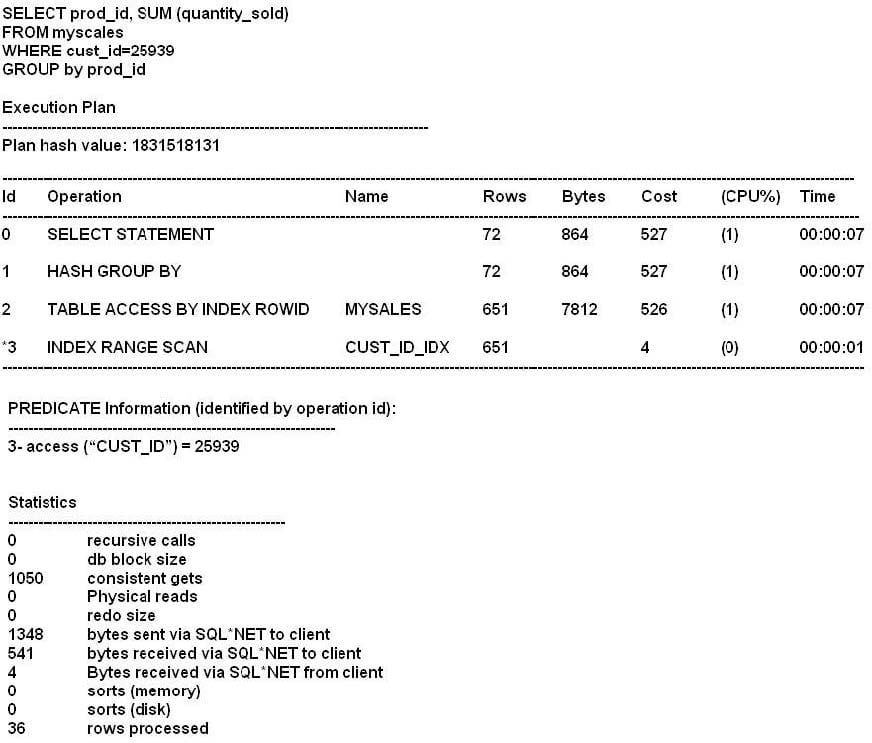

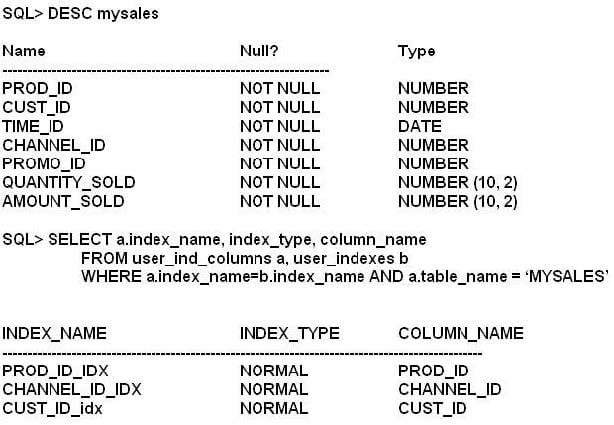

You are administering a database supporting an OLTP application. The application runs a series of extremely similar queries against the MYSALES table where the value of CUST_ID changes.

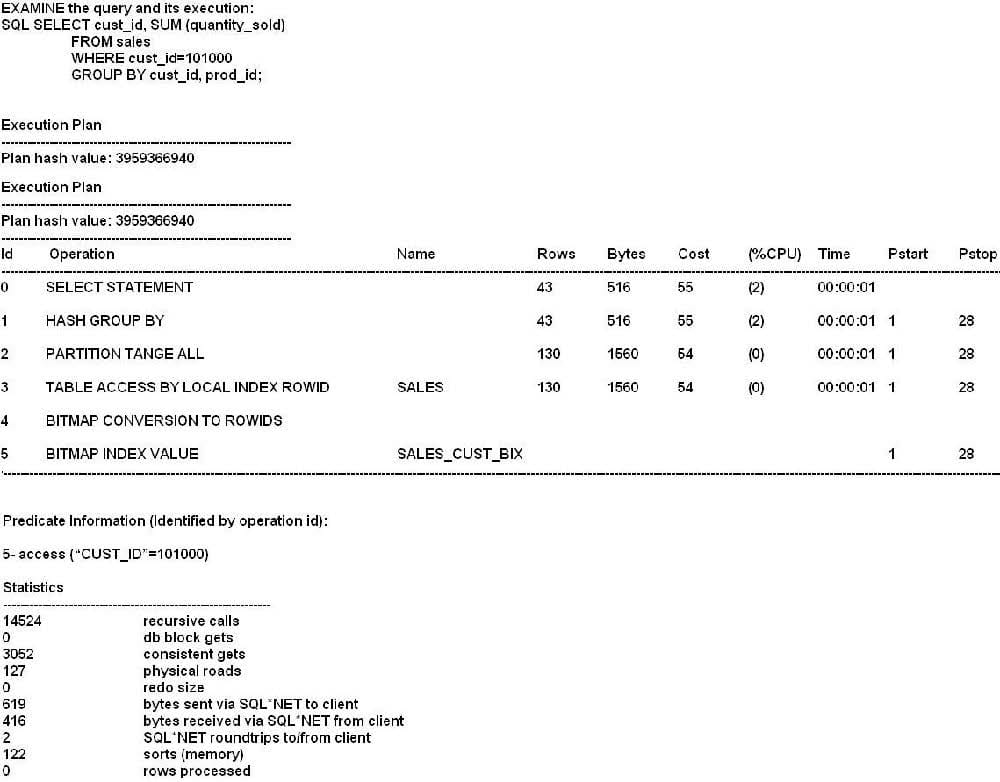

Examine Exhibit 1 to view the query and its execution plan.

Examine Exhibit 2 to view the structure and indexes for the MYSALES table. The MYSALES table has 4 million records.

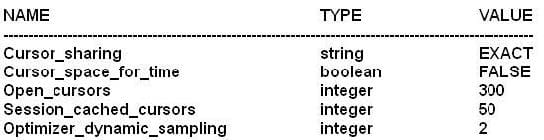

Data in the CUST_ID column is highly skewed. Examine the parameters set for the instance:

Which action would you take to make the query use the best plan for the selectivity?

A. Decrease the value of the OPTIMIZER_DYNAMIC_SAMPLING parameter to 0.

B. Use the /*+ INDEX(CUST_ID_IDX) */ hint in the query.

C. Drop the existing B*-tree index and re-create it as a bitmapped index on the CUST_ID column.

D. Collect histogram statistics for the CUST_ID column and use a bind variable instead of literal values.
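Because this question hinges on skewed CUST_ID data, the rough Python sketch below compares the uniform "rows divided by distinct values" estimate for an equality predicate with an estimate taken from a frequency histogram (exact per-value counts). The data set and function names are hypothetical, and Oracle's real cardinality estimation, including how it peeks at bind values, is considerably more involved than this toy model.

```python
from collections import Counter

def uniform_estimate(values):
    """Rows expected for col = :x if every distinct value were equally common."""
    # Without a histogram, equality selectivity is roughly 1 / number of distinct values.
    return len(values) / len(set(values))

def frequency_histogram_estimate(values, key):
    """Rows expected for col = key when a frequency histogram records each value's count."""
    return Counter(values).get(key, 0)

# Hypothetical skewed CUST_ID data: customer 1 owns 901 of 1000 rows.
cust_ids = [1] * 901 + list(range(2, 101))

for key in (1, 2):
    print(key, uniform_estimate(cust_ids), frequency_histogram_estimate(cust_ids, key))
# 1 10.0 901
# 2 10.0 1
```

With only the uniform estimate, both customers look identical (10 rows each), so nothing distinguishes them when choosing a plan; the histogram exposes that customer 1 covers about 90% of the table, where a full scan is likely cheaper than index access.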

-

Question 84:

Which three are tasks performed in the hard parse stage of a SQL statement execution?

A. Semantics of the SQL statement are checked.

B. The library cache is checked to find whether an existing statement has the same hash value.

C. The syntax of the SQL statement is checked.

D. Information about location, size, and data type is defined, which is required to store fetched values in variables.

E. Locks are acquired on the required objects.
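To picture the distinction between reusing a cursor (soft parse) and building a new one (hard parse), here is a toy Python model of a shared SQL area keyed by a hash of the exact statement text. The class name is hypothetical and SHA-256 stands in for Oracle's internal hash; the model does not attempt to show which individual checks Oracle performs in each phase, only how textual differences such as literals force new cursors while a bind variable lets one cursor be shared.

```python
import hashlib

class ToyLibraryCache:
    """Hypothetical, highly simplified shared SQL area keyed by a hash of the SQL text."""

    def __init__(self):
        self.cursors = {}
        self.hard_parses = 0
        self.soft_parses = 0

    def parse(self, sql_text):
        # Only textually identical statements share a cursor, so hash the exact text.
        key = hashlib.sha256(sql_text.encode("utf-8")).hexdigest()
        if key in self.cursors:
            # Soft parse: an existing cursor with the same hash is reused.
            self.soft_parses += 1
            return self.cursors[key]
        # Hard parse: a new cursor must be built (a plain string stands in for the plan).
        self.hard_parses += 1
        plan = f"plan for: {sql_text}"
        self.cursors[key] = plan
        return plan

cache = ToyLibraryCache()
for cust in (10, 20, 30):
    cache.parse(f"SELECT * FROM mysales WHERE cust_id = {cust}")  # literal values
for _ in range(3):
    cache.parse("SELECT * FROM mysales WHERE cust_id = :cust")  # bind variable
print(cache.hard_parses, cache.soft_parses)  # 4 2
```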

-

Question 85:

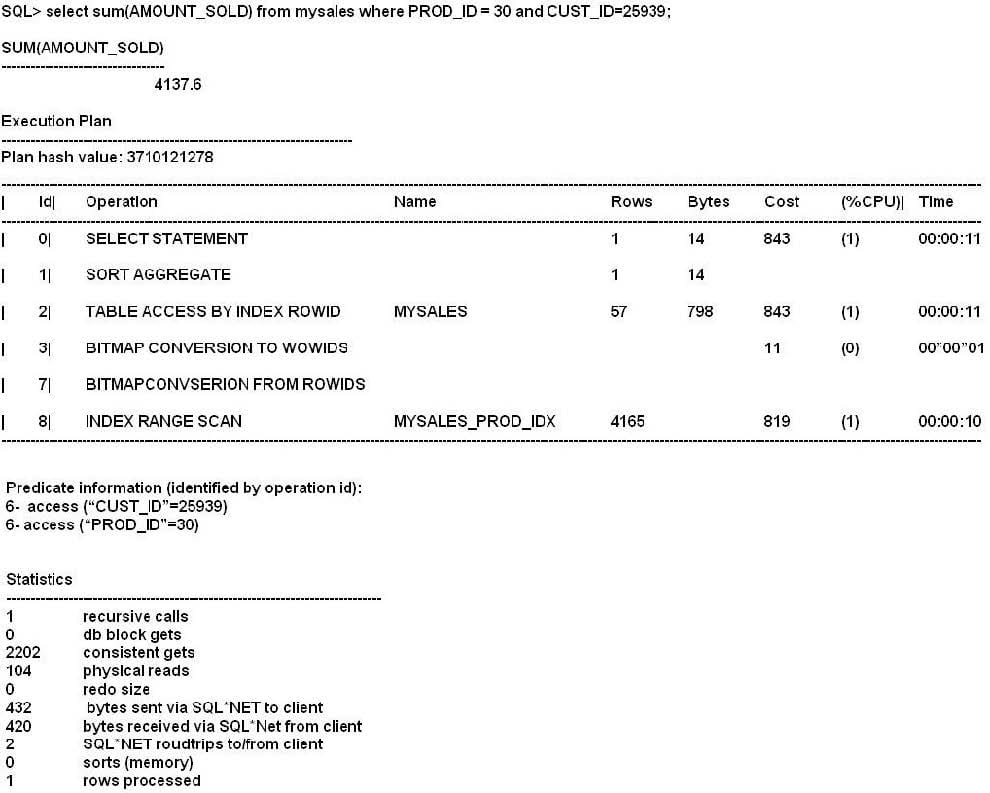

Examine the Exhibit to view the structure and indexes for the SALES table.

The SALES table has 4594215 rows. The CUST_ID column has 2079 distinct values. What would you do to influence the optimizer for better selectivity?

A. Drop the bitmap index and create a balanced B*Tree index on the CUST_ID column.

B. Create a height-balanced histogram for the CUST_ID column.

C. Gather statistics for the indexes on the SALES table.

D. Use the ALL_ROWS hint in the query.
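The figures in this question allow a quick worked estimate. Without a histogram, an equality predicate on CUST_ID is costed as if all 2079 distinct values were equally common (selectivity 1/NDV, ignoring NULLs), so every value is expected to return about 2210 of the 4594215 rows. The sketch below shows that arithmetic and then, for option B, a simplified height-balanced histogram on a small hypothetical column: rows are sorted, cut into equal-height buckets, and any value that ends two or more buckets is treated as popular. The function names are hypothetical, and the bucket-0 (minimum value) endpoint that Oracle also stores is omitted for brevity.

```python
from collections import Counter

# Figures stated in the question.
NUM_ROWS = 4_594_215
NUM_DISTINCT = 2_079

# Uniform assumption: selectivity = 1 / NDV for an equality predicate.
selectivity = 1 / NUM_DISTINCT
estimated_rows = NUM_ROWS * selectivity
print(f"estimated rows per CUST_ID value: {estimated_rows:.1f}")  # 2209.8

def height_balanced_endpoints(values, num_buckets):
    """Endpoint value of each equal-height bucket (simplified: bucket 0 omitted)."""
    ordered = sorted(values)
    n = len(ordered)
    return [ordered[(b * n) // num_buckets - 1] for b in range(1, num_buckets + 1)]

def popular_values(endpoints):
    """Values that end two or more buckets, which a height-balanced histogram treats as popular."""
    return {value: count for value, count in Counter(endpoints).items() if count > 1}

# Hypothetical skewed column: value 1 fills 60 of 100 rows.
sample = [1] * 60 + list(range(2, 42))
endpoints = height_balanced_endpoints(sample, 10)
print(endpoints)  # [1, 1, 1, 1, 1, 1, 11, 21, 31, 41]
print(popular_values(endpoints))  # {1: 6}
```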

-

Question 86:

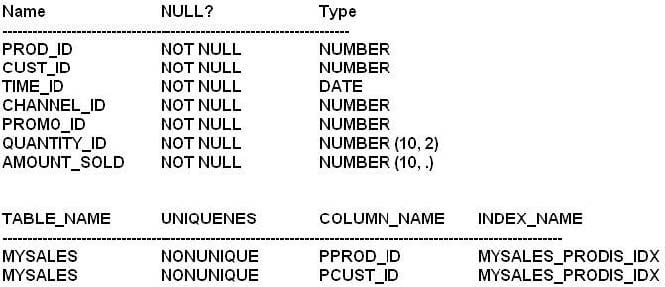

View Exhibit 1 and examine the structure and indexes for the MYSALES table.

The application uses the MYSALES table to insert sales records, but this table is also used extensively for generating sales reports. The PROD_ID and CUST_ID columns are frequently used in the WHERE clause of the queries. These columns have few distinct values relative to the total number of rows in the table. The MYSALES table has 4.5 million rows.

View Exhibit 2 and examine one of the queries and its autotrace output.

Which two methods can you use to improve the performance of the query?

A. Drop the current standard balanced B*Tree indexes on the CUST_ID and PROD_ID columns and re-create them as bitmapped indexes.

B. Use the INDEX_COMBINE hint in the query.

C. Create a composite index involving the CUST_ID and PROD_ID columns.

D. Rebuild the index to rearrange the index blocks to have more rows per block by decreasing the value of the PCTFREE attribute.

E. Collect histogram statistics for the CUST_ID and PROD_ID columns.
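Options A and B both point toward bitmap access, where the optimizer can combine per-column bitmaps with a BITMAP AND before visiting the table. The Python sketch below models that idea with integers as bitmaps (bit i set when row i holds the value); the column data and function names are hypothetical, and real bitmap indexes are compressed and map bits to rowids rather than list positions.

```python
def build_bitmap_index(column):
    """Map each distinct value to a bitmap (a Python int) with bit i set when row i has that value."""
    index = {}
    for row, value in enumerate(column):
        index[value] = index.get(value, 0) | (1 << row)
    return index

def matching_rows(bitmap):
    """Convert a bitmap back to row positions (a stand-in for rowids)."""
    return [row for row in range(bitmap.bit_length()) if (bitmap >> row) & 1]

# Hypothetical low-cardinality columns for six rows.
cust_id = [1, 2, 1, 3, 1, 2]
prod_id = [10, 10, 20, 10, 10, 20]

cust_idx = build_bitmap_index(cust_id)
prod_idx = build_bitmap_index(prod_id)

# WHERE cust_id = 1 AND prod_id = 10: AND the two bitmaps, then fetch only those rows.
combined = cust_idx[1] & prod_idx[10]
print(matching_rows(combined))  # [0, 4]
```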

-

Question 87:

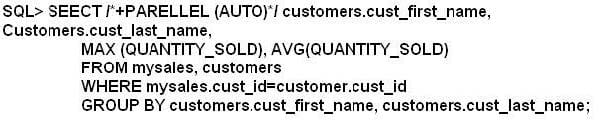

You enabled auto degree of parallelism (DOP) for your instance.

Examine the query:

Which two are true about the execution of this query?

A. Dictionary DOP will be used, if present, on the tables referred to in the query.

B. DOP is calculated if the calculated DOP is 1.

C. DOP is calculated automatically.

D. Calculated DOP will always be 2 or more.

E. The statement will execute with auto DOP only when PARALLEL_DEGREE_POLICY is set to AUTO.

-

Question 88:

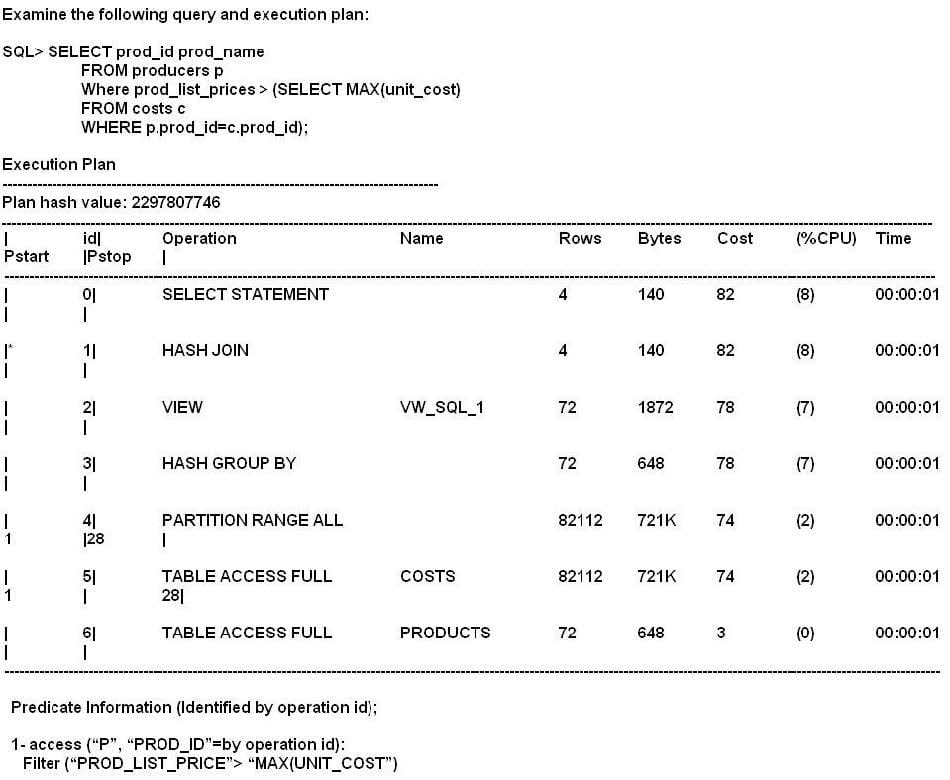

Examine the following query and execution plan. Which query transformation technique is used in this scenario?

A. Join predicate push-down

B. Subquery factoring

C. Subquery unnesting

D. Join conversion

-

Question 89:

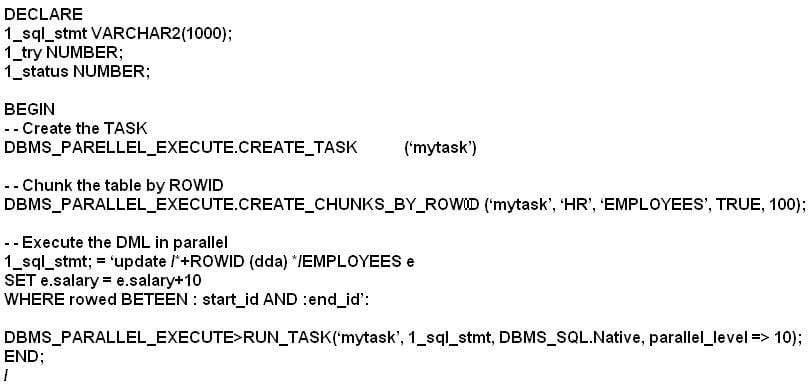

Examine the following anonymous PL/SQL block:

Which two are true concerning the use of this code?

A. The user executing the anonymous PL/SQL code must have the CREATE JOB system privilege.

B. ALTER SESSION ENABLE PARALLEL DML must be executed in the session prior to executing the anonymous PL/SQL code.

C. All chunks are committed together once all tasks updating all chunks are finished.

D. The user executing the anonymous PL/SQL code requires execute privilege on the DBMS_JOB package.

E. The user executing the anonymous PL/SQL code requires privilege on the DBMS_SCHEDULER package.

F. Each chunk will be committed independently as soon as the task updating that chunk is finished.
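The options describe an update split into chunks, each processed by a separate task. As an analogy only, the Python sketch below imitates that pattern with the standard-library sqlite3 module: it divides an id range into chunks and commits each chunk in its own transaction as soon as that chunk's update finishes. It runs the chunks one after another rather than as parallel scheduler jobs, and the table, column, and function names are hypothetical; it does not reproduce the exhibit's code.

```python
import sqlite3

def chunk_ranges(low, high, chunk_size):
    """Split the inclusive id range [low, high] into consecutive (start, end) chunks."""
    return [(start, min(start + chunk_size - 1, high))
            for start in range(low, high + 1, chunk_size)]

def update_in_chunks(conn, chunk_size):
    """Apply an update chunk by chunk, committing each chunk as soon as it finishes."""
    low, high = conn.execute("SELECT MIN(id), MAX(id) FROM sales").fetchone()
    committed = []
    for start, end in chunk_ranges(low, high, chunk_size):
        with conn:  # commits this chunk on success, rolls it back on an exception
            conn.execute(
                "UPDATE sales SET amount = amount * 1.1 WHERE id BETWEEN ? AND ?",
                (start, end),
            )
        committed.append((start, end))
    return committed

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (id INTEGER PRIMARY KEY, amount REAL)")
with conn:
    conn.executemany("INSERT INTO sales VALUES (?, ?)", [(i, 100.0) for i in range(1, 11)])

print(update_in_chunks(conn, 4))  # [(1, 4), (5, 8), (9, 10)]
```

Because each `with conn:` block ends its own transaction, a failure in a later chunk would leave earlier chunks already committed.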

-

Question 90:

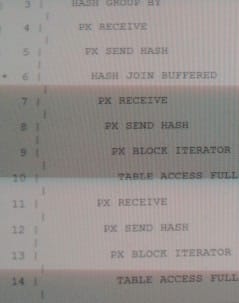

Examine the exhibit to view the query and its execution plan.

Which two statements are true?

A. The HASH GROUP BY operation is the consumer of the HASH operation.

B. The HASH operation is the consumer of the HASH GROUP BY operation.

C. The HASH GROUP BY operation is the consumer of the TABLE ACCESS FULL operation for the CUSTOMER table.

D. The HASH GROUP BY operation is the consumer of the TABLE ACCESS FULL operation for the SALES table.

E. The SALES table scan is a producer for the HASH JOIN operation.
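The producer/consumer vocabulary in this question describes which row source feeds which. The serial Python sketch below models a plan shaped like TABLE ACCESS FULL (CUSTOMERS), TABLE ACCESS FULL (SALES), HASH JOIN, HASH GROUP BY with generators: each scan produces rows, the hash join consumes both scans, and the group-by consumes the join's output. The exhibit's actual plan is not reproduced here, the table and column names are hypothetical, and in a parallel plan Oracle's producers and consumers are sets of parallel execution servers, which this serial model does not capture.

```python
from collections import defaultdict

def table_scan(rows):
    """Producer: yields rows one at a time, like a TABLE ACCESS FULL row source."""
    for row in rows:
        yield row

def hash_join(build_rows, probe_rows, build_key, probe_key):
    """Consumer of both scans: builds a hash table on one input, then probes it with the other."""
    table = defaultdict(list)
    for row in build_rows:  # consumes the build-side scan
        table[row[build_key]].append(row)
    for row in probe_rows:  # consumes the probe-side scan
        for match in table.get(row[probe_key], []):
            yield {**match, **row}

def hash_group_by(rows, group_key, sum_key):
    """Consumer of the join output: accumulates totals per group in a hash table."""
    totals = defaultdict(float)
    for row in rows:
        totals[row[group_key]] += row[sum_key]
    return dict(totals)

customers = [{"cust_id": 1, "country": "US"}, {"cust_id": 2, "country": "UK"}]
sales = [
    {"cust_id": 1, "amount": 10.0},
    {"cust_id": 2, "amount": 5.0},
    {"cust_id": 1, "amount": 2.5},
]

joined = hash_join(table_scan(customers), table_scan(sales), "cust_id", "cust_id")
print(hash_group_by(joined, "country", "amount"))  # {'US': 12.5, 'UK': 5.0}
```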
